Overview: Following my previous article — Scaling Layer 1: ZK-rollups and Sharding — I wanted to provide additional clarity on the importance of data availability and the nuances of ensuring data availability in a rollup-centric blockchain.

Understanding Data Availability

The key to understanding data availability is to start with the basics of block verification and security in a blockchain. Validators must follow a set of rules when producing a block. A block must include only valid transactions (i.e. transactions that lead to valid state changes), and it consists of two parts that together allow the network to verify that this rule has been followed:

- Block header: includes all the metadata of the block, e.g. previous block hash, Merkle root of transactions, new state root, nonce, gas limit, gas used, etc.

- Block body: includes all the transactions in the block and accounts for the majority of the block size.

Once produced, a block is shared with all the nodes in the network and full nodes (which include non-block producing validators) are responsible for verifying the transactions in the block (along with various metadata verifications) — but how do they do this? Notice that the block header includes the new state root, which is the root hash of the updated state of the system after executing the transactions in the block. Since full nodes store the previous state and download the transactions in the published block, they can perform three crucial tasks: 1) check the validity of the transactions against their stored state, 2) recompute the new state root and verify that it matches the state root included in the block header, and 3) compute the new state (or parts of the state that the node needs).
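The three tasks can be illustrated with a minimal Python sketch. It is a toy model, not how any real client works: the state is assumed to be a plain dictionary of balances, the "state root" is assumed to be a SHA-256 hash of the serialized balances rather than a Merkle-Patricia root, and the names `state_root`, `apply_transactions`, and `verify_block` are hypothetical.

```python
import hashlib
import json


def state_root(state: dict) -> str:
    """Toy stand-in for a state root: SHA-256 of the canonically serialized balances."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def apply_transactions(state: dict, txs: list) -> dict:
    """Apply transfers to a copy of the state; raise ValueError on an invalid transfer."""
    new_state = dict(state)
    for tx in txs:
        sender, recipient, amount = tx["from"], tx["to"], tx["amount"]
        if amount <= 0 or new_state.get(sender, 0) < amount:
            raise ValueError(f"invalid transaction: {tx}")
        new_state[sender] -= amount
        new_state[recipient] = new_state.get(recipient, 0) + amount
    return new_state


def verify_block(prev_state: dict, header: dict, body: list):
    """Return the new state if the block is valid, otherwise None."""
    try:
        new_state = apply_transactions(prev_state, body)  # task 1: check validity
    except ValueError:
        return None
    if state_root(new_state) != header["state_root"]:  # task 2: compare state roots
        return None
    return new_state  # task 3: the updated state


# Example: a one-transaction block whose header commits to the resulting state.
prev_state = {"alice": 10, "bob": 0}
body = [{"from": "alice", "to": "bob", "amount": 4}]
header = {"state_root": state_root({"alice": 6, "bob": 4})}
new_state = verify_block(prev_state, header, body)
```

In this sketch a block is accepted only when every transaction is valid against the stored state and the recomputed root equals the one in the header, which is why the node needs the full block body to reach a verdict.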

Because full nodes need the transaction data in order to verify a block, a traditional monolithic blockchain does not run into data availability problems (this does not hold for light nodes, as noted below). If a validator publishes a block but withholds some transaction data, full nodes cannot reproduce the state root in the block header from the transactions that were made available, so they reject the block and the ledger is not updated. Therefore, all the transaction data must be made available to the network for full nodes to accept the block.
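A complementary way to see the rejection uses the Merkle root of transactions that the header also carries. The sketch below is illustrative only: the tree construction (SHA-256, duplicating the last node on odd levels) is an assumed convention rather than any specific chain's rule, and `merkle_root` and `body_matches_header` are hypothetical names.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list) -> bytes:
    """Binary Merkle root; odd levels duplicate their last node (assumed convention)."""
    if not leaves:
        return sha256(b"")
    level = [sha256(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def body_matches_header(header_tx_root: bytes, received_txs: list) -> bool:
    """A full node accepts the body only if it hashes to the root in the header."""
    return merkle_root(received_txs) == header_tx_root


# The producer commits to three transactions but publishes only two of them.
all_txs = [b"tx1", b"tx2", b"tx3"]
header_tx_root = merkle_root(all_txs)
published_txs = all_txs[:2]
accepted = body_matches_header(header_tx_root, published_txs)  # False
```

Either check fails for the same reason: the header commits to data the node never received.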

Side note: light nodes, which do not store state and usually download only the block header, cannot perform this check themselves, so a block producer can convince them that all data has been published even when it has not. Data availability sampling (DAS), explained in a rollup context below, can also be a mechanism for light clients on an L1 to verify data availability.

Data Availability in a Rollup-centric Blockchain

In a modular blockchain, a different mechanism to ensure data availability is necessary because verification and data availability are decoupled. Rollups move the verification of transactions and the computation of new states off-chain but still need to post some transaction data on-chain to avoid data availability problems. ZK-rollups, specifically, post three primary pieces of data to the main chain:

- Cryptographic commitment (the root hash) of the new state

- Cryptographic proof (e.g. a ZK-SNARK) which proves that the new state is the result of applying valid transactions to the previous state

- Small amount of data for every transaction in the batch in the form of calldata

The zero-knowledge nature of the cryptographic proof means it establishes that the transactions and the resulting state change are valid while revealing no information about the transactions themselves. Full nodes therefore no longer need the transaction data to actively verify transactions and recompute new state roots. However, if sufficient transaction data is not published to the main chain, nodes on the L1 cannot determine the current state of the rollup, which is only (but still crucially) necessary in certain scenarios. For example, if the data is not published and the rollup operator suddenly ceases operations, rollup users may be unable to withdraw to the main chain because nodes cannot calculate their current balances. Therefore, enough transaction data to recover the latest state of the rollup still needs to be published so that it can be downloaded if needed. While a rollup operator cannot steal users' funds, because an invalid state change cannot pass the proof system, data availability is still required to ensure users have access to their accounts in these cases.
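The recovery scenario can be sketched in a few lines of Python. Everything here is a simplifying assumption for illustration: `RollupBatch` is a hypothetical container for the three posted items, calldata is modeled as plain `(sender, recipient, amount)` tuples rather than a compressed encoding, the state root is a SHA-256 hash of the balances, and the proof is treated as an opaque blob that is not verified.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class RollupBatch:
    new_state_root: str  # commitment to the state after the batch
    proof: bytes         # opaque validity proof (not verified in this sketch)
    calldata: list       # per-transaction data as (sender, recipient, amount)


def state_root(balances: dict) -> str:
    """Toy commitment: SHA-256 of the canonically serialized balances."""
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()


def rebuild_state(genesis: dict, batches: list) -> dict:
    """Replay the published calldata to recover balances without the operator."""
    balances = dict(genesis)
    for batch in batches:
        for sender, recipient, amount in batch.calldata:
            balances[sender] = balances.get(sender, 0) - amount
            balances[recipient] = balances.get(recipient, 0) + amount
        if state_root(balances) != batch.new_state_root:
            raise ValueError("published data does not reproduce the committed state root")
    return balances


# Example: one batch with two transfers, fully published.
genesis = {"alice": 10, "bob": 0}
final_state = {"alice": 6, "bob": 3, "carol": 1}
batch = RollupBatch(
    new_state_root=state_root(final_state),
    proof=b"",
    calldata=[("alice", "bob", 4), ("bob", "carol", 1)],
)
recovered = rebuild_state(genesis, [batch])
```

If the operator had posted the commitment and proof but left out one of the transfers, `rebuild_state` would raise, and nobody outside the operator could work out the balances needed for a withdrawal.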

But how do full nodes ensure that the rollup posts all the transaction data? Since full nodes are no longer computing state changes (for the rollup at least), there is no way for them to know that the rollup withheld even a single transaction without downloading the data and recomputing the state change. That solution would require full nodes to keep up with the rollup’s rate of transaction computation, which defeats the point of moving execution off-chain. In a modular blockchain architecture, we need an efficient way to solve for data availability.

Solving the Problem with DAS and Erasure Coding

The most efficient (and throughput-maximizing) way to ensure data availability is through erasure coding and data availability sampling. Erasure coding adds redundancy to the posted transaction data so that the entire data set can be recovered from any 50% of the extended data. As a result, an operator who wants to make even 1% of the data unrecoverable must withhold more than 50% of the extended data. Nodes therefore only need to sample parts of the data to be probabilistically assured that all of it is available to the network. Each random sample of a block that is missing more than half of its chunks succeeds with probability below 1/2, so seven successful samples in a row occur with probability below (1/2)^7, or about 0.8%. In other words, sampling seven chunks of data is sufficient to have a 99% chance of detecting an unavailable rollup block and rejecting it.
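Both ideas can be tried out in a small Python sketch. It is a toy under stated assumptions, not a production scheme: the erasure code is a Reed-Solomon-style polynomial extension over the tiny prime field of size 257 (chosen here only so that byte values fit), chunks are single integers, samples are assumed independent and uniformly random, and all function names are hypothetical. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
import math

P = 257  # small prime field, large enough for byte values (toy choice)


def lagrange_eval(points: list, x: int) -> int:
    """Evaluate at x the unique lowest-degree polynomial through points (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def extend(data: list) -> list:
    """Treat k values as evaluations at x = 0..k-1 and append k more evaluations."""
    k = len(data)
    points = list(enumerate(data))
    return data + [lagrange_eval(points, x) for x in range(k, 2 * k)]


def recover(chunks: dict, k: int) -> list:
    """Rebuild the k original values from any k surviving {index: value} chunks."""
    if len(chunks) < k:
        raise ValueError("fewer than k chunks available; data cannot be recovered")
    points = list(chunks.items())[:k]
    return [lagrange_eval(points, x) for x in range(k)]


def detection_probability(samples: int, available_fraction: float = 0.5) -> float:
    """Chance that at least one of the samples hits a withheld chunk."""
    return 1.0 - available_fraction ** samples


def samples_needed(target: float, available_fraction: float = 0.5) -> int:
    """Smallest number of samples whose detection probability reaches target."""
    return math.ceil(math.log(1.0 - target) / math.log(available_fraction))


data = [72, 105, 33, 7]
extended = extend(data)                              # 8 chunks, twice the original
survivors = {i: extended[i] for i in (1, 4, 6, 7)}   # any 4 of the 8 chunks
restored = recover(survivors, k=len(data))           # equals data
confidence = detection_probability(7)                # 0.9921875
```

With half of the extended chunks sufficient for recovery, `samples_needed(0.99)` evaluates to 7, which matches the figure quoted above.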

This solution allows blockchains to scale via rollups without sacrificing security and decentralization. An equally important but nuanced result of this solution is that it also inverts the traditional relationship between decentralization and scalability. To reach a given threshold of probabilistic guarantees that data is available, nodes collectively need to sample the transaction data a certain number of times. When we introduce more nodes to the network, each node needs to sample a block fewer times (or can sample a larger block the same number of times), allowing the blockchain to scale with decentralization while maintaining the same level of security.
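One rough way to make this scaling relationship concrete is to ask how many samples each node must take so that the network as a whole is expected to touch at least half of a block's chunks, which is the amount needed for recovery. The Python sketch below rests on a simplified model that is an assumption of this example, not a description of any deployed protocol: every sample is independent and uniformly random, and the function names are hypothetical.

```python
import math


def expected_coverage(num_chunks: int, num_nodes: int, samples_per_node: int) -> float:
    """Expected fraction of distinct chunks hit by all samples across the network."""
    total_samples = num_nodes * samples_per_node
    return 1.0 - (1.0 - 1.0 / num_chunks) ** total_samples


def min_samples_per_node(num_chunks: int, num_nodes: int, target: float = 0.5) -> int:
    """Samples each node needs so that expected coverage reaches the target."""
    total = math.log(1.0 - target) / math.log(1.0 - 1.0 / num_chunks)
    return math.ceil(total / num_nodes)


# Same block size, twice as many nodes: each node can sample less.
few_nodes = min_samples_per_node(num_chunks=1024, num_nodes=100)
many_nodes = min_samples_per_node(num_chunks=1024, num_nodes=200)

# Same per-node effort, twice as many nodes: the block can be twice as large.
bigger_block = min_samples_per_node(num_chunks=2048, num_nodes=200)
```

Under this model the total number of samples required grows roughly in proportion to the number of chunks, so doubling the node count either halves the per-node work or supports a block about twice as large for the same work.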

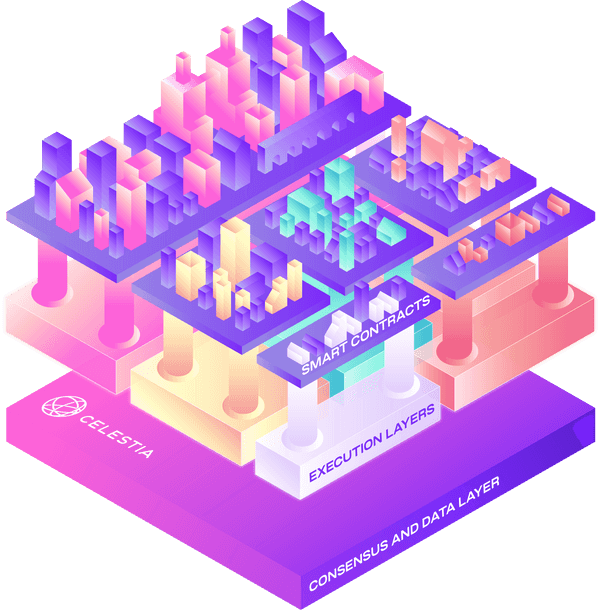

DA-Only Layers

With the sharding rollout, Ethereum is pivoting to provide primarily a data availability and consensus layer for rollups. Although decisions around the roles of shards are still pending, a few shards will likely maintain execution ability while the remainder will function as data depots. DA-only blockchains, like Celestia, remove execution entirely at the base layer and provide only consensus and data availability — i.e. ordering transactions and guaranteeing their availability. Because these blockchains are built as DA-only layers from the ground up, they have a few crucial advantages over any blockchains that maintain even some execution capacity:

- Node requirements can be lower because execution capacity is not required; additionally, light nodes (i.e. nodes that do not store state) using DAS can provide nearly the same security as full nodes

- More nodes increase scalability due to the properties of DAS

- No execution environment means that the blockchain does not impose execution logic on data posted to the chain

The last point is key. Because the base layer imposes no execution logic, you could conceivably have applications built for both EVM and non-EVM execution environments, executed on rollups, and all secured by the base consensus and DA layer. Similarly, you could drag and drop a wide variety of existing rollups built for other blockchains' execution environments. In a multi-chain world, DA-only layers present a wide-open design space.

Citations

- https://notes.ethereum.org/@vbuterin/data_sharding_roadmap

- https://vitalik.ca/general/2021/01/05/rollup.html

- https://coinyuppie.com/a-detailed-explanation-of-rollup-technology-applications-and-data/

- https://blog.polygon.technology/zk-and-the-future-of-ethereum-scaling/

- https://blog.polygon.technology/the-data-availability-problem-6b74b619ffcc/

- https://vitalik.ca/general/2021/04/07/sharding.html