A collectively owned generative AI model can be a beacon of decentralization, collaboration, and true ownership by creators.

A note about intended audience: This piece delves into some concepts in blockchains, machine learning, and AI, assuming a basic understanding of these areas. However, I've made an effort to simplify technical jargon and concepts and also provide hyperlinks for the non-technical reader. Regardless of your familiarity with these subjects, the piece aims to spark ideas and discussions around the collaborative possibilities between AI and creative endeavors. Whether you're deep in the technical world or simply intrigued by the potential of AI to transform creative practices, there's insight here for you.

0. Introduction

In the quest for innovation across art, media, and music, generative AI has emerged as a powerful force. Yet, this power is increasingly concentrated in the hands of a few — OpenAI, Microsoft, Google — shaping societal and cultural landscapes through their proprietary models. This centralization sparks concerns around ownership, privacy, and the equitable distribution of technology's benefits. My proposition? A collectively owned generative AI model can be a beacon of decentralization, collaboration, and true ownership by creators.

Let a Million Generative AI Models Bloom!

As an artist and technologist, I've observed the transformative impact of generative AI and its potential to democratize creative expression. Yet, the current landscape is dominated by large, closed-source models, limiting access and control for individual creators. One solution could be to foster a multitude of fine-tuned generative AI models, owned and managed by the creators themselves. This not only challenges the status quo of centralization but also celebrates the diversity of creativity and innovation.

Why Collective Ownership Matters

Collective ownership of generative AI models empowers artists in several ways:

- Control and Compensation: Imagine a creative ecosystem where artists maintain sovereignty over their data and creations, ensuring fair recognition and compensation. In this envisioned future, artists who contribute their works to a collectively owned model could see direct financial benefits, such as receiving a share of profits generated from the model's use, whether through subscriptions or other monetization strategies. This model not only facilitates an additional income stream but also contributes value to the creative ecosystem by embodying a collective point of view.

- Collaborative Innovation: The introduction of advanced digital audio workstations (DAWs) and VST plugins revolutionized sound design, allowing artists to push the boundaries of music production. Similarly, the integration of MaxMSP with Ableton Live empowered musicians and visual artists to create bespoke plugins and immersive audio-visual performances. These technological advances underscore how collaboration, fueled by state-of-the-art tools, can drive innovation. By working together, artists leverage these technologies to explore new artistic and technological frontiers, enhancing both their collective creativity and individual expressions.

- Accessibility for All: The challenge for many artists lies in navigating the barriers to accessing cutting-edge technology, whether due to cost, complexity, or both. In the same way ChatGPT democratized access to large language models (LLMs), art-focused collective models aim to dismantle these barriers. This approach makes advanced tools, like bespoke AIs tailored for specific artistic styles, more accessible to every artist, regardless of their technical expertise or financial resources. It's a step towards an inclusive creative landscape where technology empowers all creators equally.

- Creative Diversity: Just as the music world thrives on a wealth of genres and traditions, the diversity of collective, artist-fine-tuned models promises a rich tapestry of styles and perspectives. This abundance fosters a fertile ground for experimentation, leading to the emergence of new genres, styles, techniques, and models of monetization. By encouraging a broad spectrum of creative expressions, collectively owned models not only enrich the artistic community but also challenge and expand the collective imagination. The diversity of these models acts as a beacon, illuminating the myriad ways in which technology can enhance and amplify the creative voice of every artist.

A Vision for the Future

This piece extends an invitation to envision a future where generative AI is not only by and for the creators but also a gift they can offer to the world, reflecting the collective's aspirations and generosity. Drawing from a rich tapestry of ideas and the collective wisdom of the past, my goal is to spark discussions, inspire collaborations, and encourage the actualization of this vision. If this resonates with you, feel free to reach out through my socials (@markredito) or email.

I. Foundations for Collective AI Creation

Before delving into the mechanics of building a collectively owned generative AI model, it's essential to establish a solid foundation. This involves assembling the right mix of creative minds, selecting a suitable base model, and securing the necessary computational resources. Here's what we need:

- Forming the Collective: A group of like-minded creators or artists who come together to build an AI model for their own purposes. They may share the model with others through open source or monetize it collectively. Each member has a stake in the model's success and control over its usage and development. This collective might choose to organize itself as a cooperative or a decentralized autonomous organization (DAO), with governance structures varying by size and ambition. What binds this collective is a unique shared aesthetic or style that sets them apart from others (“a secret sauce”), ensuring their AI model reflects their collective identity.

- Choosing an Open Source Base Model: Leveraging pre-trained models like Stability AI’s Stable Diffusion or Meta AI’s AudioCraft saves time and resources, offering a solid foundation for visual or audio projects. Regardless of model purpose or architecture, it’s generally recommended to choose a base model released under an open license (e.g., Apache 2.0, CC BY 4.0, MIT License) to allow for customization and fine-tuning. However, when aiming to monetize, it's essential to consult legal guidance to navigate copyright restrictions, commercial use limitations, and the nuances of open-source licenses (guide here). This ensures that the collective's efforts to develop and commercialize their model are legally sound and aligned with the licensing terms.

- Securing Computational Resources: Training and running inference on a generative AI model requires computational power. For training on smaller datasets, a high-end gaming GPU like an Nvidia RTX 4090 may suffice. However, larger projects may benefit from cloud-based GPU services such as Together.ai, Vast.ai, or Runpod, which provide access to more robust computing resources. While more powerful, these options also introduce costs related to data size, training duration, and model deployment.

II. Blueprint for Building a Collectively Owned AI Model

Formalizing the Collective

The foundation of a collectively owned AI model is a group of artists with a shared vision and aesthetic. This could be a group of musicians or visual artists, each bringing their unique style to the table. For instance, consider the surreal 2000s-era pop sound of "PC Music" or the manga-style illustrations of "Superani." These collectives stand out for their unique contributions to their respective fields with a defining collective style. Organizing as a DAO or cooperative could offer a structured approach to governance and decision-making, with blockchain technology providing a novel way to verify membership and manage contributions.

Gathering Data

With the collective in place, members contribute their creative outputs into a common data pool. For musicians building a generative audio model, this could be stems, instrumentals, and mixes. For visual artists, it could be image and video files. This data represents the collective’s style and forms the foundation for the AI model to learn from. It's crucial that this data is well-organized and curated to ensure the model can learn effectively from it.
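To make the idea of a shared, well-organized data pool a bit more concrete, here is a minimal sketch of a contribution manifest. It assumes each file is recorded under a SHA-256 content hash so exact duplicates are caught and every file stays linked to the member who submitted it. The `Contribution` record, the `register` helper, and the member names are hypothetical, invented only for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Contribution:
    contributor: str  # hypothetical member handle
    filename: str
    sha256: str       # content hash, used to spot exact duplicates
    size_bytes: int


def register(manifest: dict[str, Contribution], contributor: str,
             filename: str, data: bytes) -> bool:
    """Add a file to the manifest; return False if identical bytes already exist."""
    digest = hashlib.sha256(data).hexdigest()
    if digest in manifest:
        return False
    manifest[digest] = Contribution(contributor, filename, digest, len(data))
    return True


# Placeholder bytes stand in for real audio files.
manifest: dict[str, Contribution] = {}
register(manifest, "alice", "lead_stem.wav", b"fake-audio-bytes-1")
register(manifest, "bob", "drums.wav", b"fake-audio-bytes-2")
duplicate_added = register(manifest, "bob", "lead_copy.wav", b"fake-audio-bytes-1")
print(len(manifest), duplicate_added)
```

A content hash only catches byte-identical files. Near-duplicates, such as the same stem exported at a different bitrate, would need a separate similarity check.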

Attributing Data

Attributing contributions accurately is vital. Similar to how traditional music rights are managed (e.g., split sheets), data contributions could be tracked to ensure fair recognition and compensation. Blockchain technology might play a role here, allowing for transparent and automated attribution and rewards for contributors.
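As a rough illustration of a split sheet applied to data contributions, the sketch below divides revenue in proportion to each member's contribution weight. The weighting scheme (for example, the number of accepted files) and the member names are assumptions of mine. A real collective would agree on its own formula. Amounts are kept in integer cents, and leftover cents go to the largest fractional remainders so the payouts always sum to the original amount.

```python
def split_revenue(weights: dict[str, int], revenue_cents: int) -> dict[str, int]:
    """Divide revenue proportionally to weights, in whole cents."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one positive weight is required")

    # Start with each member's floored proportional share.
    payouts = {name: revenue_cents * w // total for name, w in weights.items()}
    leftover = revenue_cents - sum(payouts.values())

    # Hand out the remaining cents by largest fractional remainder
    # (ties broken alphabetically so the result is deterministic).
    by_remainder = sorted(
        weights, key=lambda n: (-(revenue_cents * weights[n] % total), n)
    )
    for name in by_remainder[:leftover]:
        payouts[name] += 1
    return payouts


# Hypothetical weights: number of accepted contributions per member.
payouts = split_revenue({"alice": 3, "bob": 2, "carol": 1}, 10_000)
print(payouts)
```

The same proportions could be encoded in an on-chain split contract. The off-chain version here is only meant to show the arithmetic.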

Pre-processing Data

Before training begins, the collected data needs to be adapted to fit the model's requirements. This might involve resizing images or formatting audio files, ensuring the data is in the best possible shape to teach the AI model the collective’s unique style. (See: Preprocessing)
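For audio, "formatting" often comes down to bringing every file to a consistent level and length. The sketch below is one possible approach, not a requirement of any particular model: it peak-normalizes a list of samples and cuts it into equal-length clips. The function names and the tiny sample list are made up for illustration. Real pipelines would typically read audio with a dedicated library and also handle sample rate and channel count.

```python
def peak_normalize(samples: list[float], peak: float = 1.0) -> list[float]:
    """Scale samples so the loudest one has absolute value `peak`."""
    loudest = max((abs(s) for s in samples), default=0.0)
    if loudest == 0.0:
        return list(samples)  # silence (or empty input): nothing to scale
    scale = peak / loudest
    return [s * scale for s in samples]


def split_into_clips(samples: list[float], clip_len: int) -> list[list[float]]:
    """Cut samples into equal-length clips, dropping a trailing partial clip."""
    if clip_len <= 0:
        raise ValueError("clip_len must be positive")
    usable = len(samples) - len(samples) % clip_len
    return [samples[i:i + clip_len] for i in range(0, usable, clip_len)]


# Toy waveform; a real 2-second clip at 32 kHz would hold 64,000 samples.
raw = [0.1, -0.5, 0.25, 0.0, 0.2]
normalized = peak_normalize(raw)
clips = split_into_clips(normalized, clip_len=2)
print(clips)
```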

Fine-tuning the Pre-trained Model

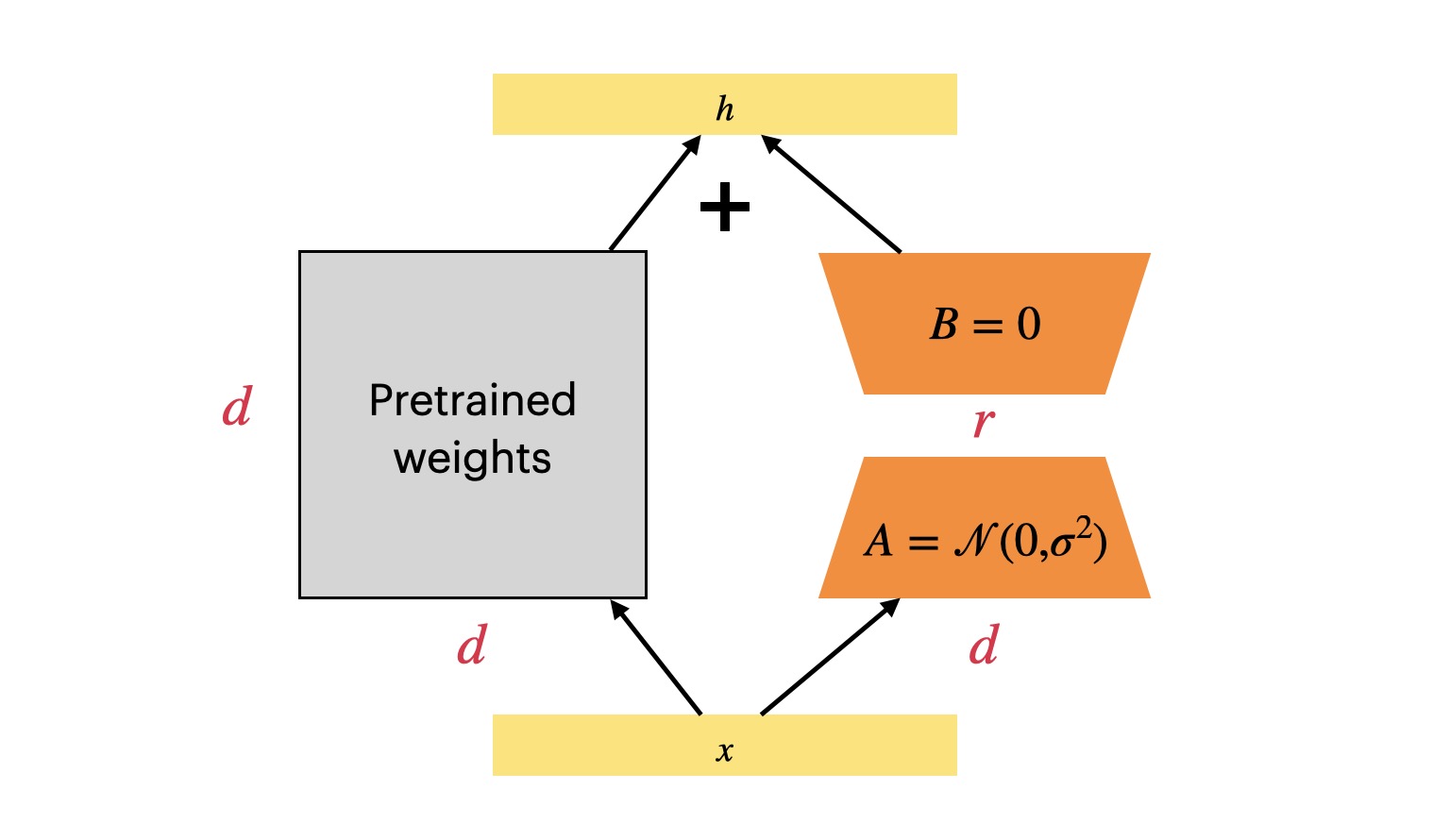

The actual training process involves adjusting a pre-existing AI model with the collective's data. Techniques such as Low-Rank Adaptation (LoRA) allow generative models to be fine-tuned in a computationally efficient way. Rather than updating all of the model's weights, LoRA keeps them frozen and trains a small set of added low-rank matrices, akin to carefully adjusting a few strings to tune a large harp, enhancing its performance without altering the entire instrument. This step is crucial for imbuing the model with the collective’s artistic essence while being resource efficient. The process requires careful calibration and possibly several iterations to refine the model's output to match the collective's vision.
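To give a feel for why LoRA is cheap, here is a small pure-Python sketch of the core idea as described in the LoRA paper: the frozen weight matrix W0 is combined with a trainable low-rank update, W = W0 + (alpha / r) * B * A, where B has shape d_out x r and A has shape r x d_in. The toy matrices and layer sizes are my own illustrative choices. In practice, a library such as Hugging Face PEFT would handle this inside the model.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product for small nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w0: Matrix, b: Matrix, a: Matrix,
                          alpha: float, rank: int) -> Matrix:
    """Return W0 + (alpha / rank) * (B @ A); W0 itself is never modified."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(w0, delta)]


def trainable_params(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Parameter counts for full fine-tuning vs. a LoRA update of one layer."""
    return d_out * d_in, rank * (d_out + d_in)


w0 = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]   # frozen pre-trained weights (2 x 3)
a = [[0.5, 0.0, -1.0]]                     # trainable, shape r x d_in (r = 1)

# B starts at zero, so before training the model behaves exactly like the base.
untrained = lora_effective_weight(w0, [[0.0], [0.0]], a, alpha=2.0, rank=1)

# After some (pretend) training, B is non-zero and shifts the weights.
trained = lora_effective_weight(w0, [[1.0], [2.0]], a, alpha=2.0, rank=1)

full, lora = trainable_params(1024, 1024, rank=8)
print(untrained == w0, trained, full, lora)
```

For a single 1024 x 1024 layer at rank 8, the LoRA update trains 16,384 values instead of 1,048,576, which is where most of the savings come from.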

Evaluation and Iteration

After training, the model undergoes a dual-phase evaluation: measuring its accuracy with quantitative metrics like loss (the difference between the model's predictions and the actual data) and assessing its artistic fidelity through qualitative analysis. Loss, a critical indicator, needs to be minimized to ensure the model's predictions closely mirror the target outputs, such as a 2-second music clip resembling the original dataset. For specialized models, additional metrics come into play: Inception Score (IS) and Fréchet Inception Distance (FID) for images, or Mel Cepstral Distortion (MCD) and Kullback-Leibler (KL) divergence for audio, each offering nuanced insights into the model's performance in its domain. However, it's in the qualitative assessment where the model's true alignment with the collective’s vision shines, especially for audio models, where musicians judge if the generated music embodies their "collective sound." This phase hinges on subjective perception, inviting members to listen and decide if the output meets their standards of quality.

This iterative process, blending technical precision with artistic judgment, may require several rounds before the model faithfully reflects the collective's artistic intention and style. Beyond just listening, qualitative assessment could involve gathering feedback from a wider audience or conducting structured listening tests to ensure the model's outputs resonate on a broader scale. Balancing these objective and subjective evaluations guides the fine-tuning process, ensuring the final model not only performs accurately but also captures the essence of the collective’s creative vision.
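Of the quantitative metrics mentioned above, KL divergence is the easiest to show in a few lines. The sketch below computes KL(p || q) between two discrete distributions, where lower values mean the generated material is statistically closer to the reference. The histograms are invented for illustration. In audio evaluation, the distributions usually come from a pretrained classifier's label probabilities rather than hand-counted features.

```python
import math


def normalize(counts: list[float]) -> list[float]:
    """Turn raw counts into a probability distribution."""
    total = sum(counts)
    if total <= 0:
        raise ValueError("counts must sum to a positive value")
    return [c / total for c in counts]


def kl_divergence(p: list[float], q: list[float], eps: float = 1e-12) -> float:
    """KL(p || q) in nats for two distributions over the same bins."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same number of bins")
    # Bins where p is zero contribute nothing; q is floored to avoid log(0).
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical histograms over four feature bins.
reference = normalize([40, 30, 20, 10])   # the collective's own tracks
generated = normalize([25, 25, 25, 25])   # model output

print(kl_divergence(reference, reference))  # identical distributions
print(kl_divergence(reference, generated))  # larger means further apart
```

A number like this is only a rough sanity check. It can tell the collective that outputs have drifted, but not whether they sound right.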

Deploying the Model

Once satisfied with the model, the next step is making it accessible. The collective can decide whether to keep the model for its own use or share it widely. Options include platforms like Hugging Face or Replicate for broader access. The collective might also consider building a bespoke frontend/UI or an API to share and monetize the model. Using ERC-20 tokens or NFTs to gate access to the model may also be an option if going the blockchain route.
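Token gating can sound abstract, so here is a minimal sketch of the check an API might run before serving a generation request. It assumes access is granted to any address holding at least a minimum balance of the collective's token. The `InMemoryLedger` class, the addresses, and the threshold are placeholders of mine. A real deployment would query the token contract's `balanceOf` function on-chain and verify that the caller actually controls the address (for example, via a signed message).

```python
from typing import Protocol


class TokenLedger(Protocol):
    """Anything that can report a token balance for an address."""

    def balance_of(self, address: str) -> int: ...


class InMemoryLedger:
    """Stand-in for an on-chain token contract, for illustration only."""

    def __init__(self, balances: dict[str, int]) -> None:
        self._balances = dict(balances)

    def balance_of(self, address: str) -> int:
        return self._balances.get(address, 0)


def can_generate(ledger: TokenLedger, address: str, min_balance: int = 1) -> bool:
    """Allow access only if the address holds at least `min_balance` tokens."""
    return ledger.balance_of(address) >= min_balance


# Hypothetical addresses and balances.
ledger = InMemoryLedger({"0xMember": 5, "0xLapsed": 0})
print(can_generate(ledger, "0xMember"), can_generate(ledger, "0xStranger"))
```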

Continuous Evolution

The world of art is ever-evolving, and so too must the AI model. Regular updates and refinements will be necessary to keep the model relevant and reflective of the collective’s current artistic direction. This includes accommodating changes in membership and evolving artistic styles, ensuring the model remains a true representation of the collective's creative output.

III. Challenges and Open Questions in Collective AI Ownership

Who owns the IP of generated works?

In the United States, purely AI-generated art, such as images produced by Midjourney, is generally not eligible for copyright protection. This is because the Copyright Office has determined that copyright protects only works of human authorship. This position applies to output from any generative model on the market, regardless of provider. Separately, each provider's terms of use govern how outputs may be used, with some allowing full commercial use and others being more restrictive.

When it comes to music and audio generated by AI, the situation is less clear due to the complexity of traditional music rights. It is uncertain who truly owns the output of bespoke generative models. One potential solution is for collectives, with the guidance of legal experts, to draft their own policies regarding the usage and rights of generated content.

Legal Uncertainty in Model Ownership

AI/ML models are more nuanced than traditional software, as they involve both structured work (architecture and training regimen) and general content (trained weights and data). The ownership of these models is not clearly defined and could be bound by multiple licenses for different domains. Without legal precedent and clear guidance, it might be difficult to determine the ownership of the model and how it can be monetized or leveraged. For a more comprehensive understanding of open source AI model licenses, refer to the "State of Open Source AI" book here.

Data Attribution in Generative AI

Data attribution is crucial in generative AI, as it ensures that creators are properly credited and compensated for their contributions. Although research has been conducted on data attribution techniques (e.g., Evaluating Data Attribution for Text-to-Image Models (Aug 2023, Wang et al.)) and on related artist-protection tools such as Nightshade, it remains to be seen how these methods will perform in real-world situations or with complex datasets. Further experimentation is needed to fully understand their capabilities and limitations.

Blockchains

Blockchain technology presents a compelling solution for verifying the provenance of digital media and content. It has been effectively utilized in non-fungible tokens (NFTs), providing secure, cryptographically immutable metadata and proof of ownership. Beyond their primary function, NFTs have also been used to facilitate seamless split payments across different collaborators (e.g., Splits), streamlining revenue sharing and ensuring fair compensation. Additionally, tokens can facilitate governance in DAOs (Decentralized Autonomous Organizations) through voting or as a means to signify membership. Despite this potential, public sentiment about blockchain remains mixed. Furthermore, the need to pay transaction fees to execute actions, a feature that is crucial for network security, can be viewed as inconvenient and burdensome by some users. Enhancing the user experience and simplifying the onboarding process are critical steps needed to address these challenges and broaden blockchain's accessibility for data attribution and split payments. Experimental projects like Holly+ (and its DAO) and Botto provide glimpses into how decentralized AI with blockchain integration might evolve. As the technology continues to develop, we can anticipate further experimentation in this area.

Organizational Dynamics and DAOs

While DAOs and digital cooperatives provide a model for decentralized governance and collective decision-making, they also introduce governance complexities, especially when managing membership changes or IP rights. The departure of members or shifts in the collective's composition necessitate careful management of contributions and rights.

The Imperative for Continuous Improvement

The dynamic nature of art and technology means that a generative AI model must continually evolve. Regular updates and refinements, driven by both artistic growth and technological advancements, are crucial but can also impose financial and logistical burdens on the collective.

Technical Expertise and Accessibility

The technical demands of developing and maintaining a generative AI model can be a significant barrier. While not every artist needs to be a machine learning expert, a deeper understanding of the technology can unlock new creative possibilities. The scarcity of no-code/low-code solutions further exacerbates the challenge, making technical proficiency and potentially significant startup capital essential for success.

Concluding Thoughts: Toward a Future of Collective Creation

We’ve already seen creators leverage generative AI in their own work: from generating the initial stems for a song to creating concept art for a project, or even crafting drafts to build upon. These examples underscore how generative AI can significantly augment the creative process. Yet, there's a vast design space awaiting us—one where we can construct generative AI models that honor the unique voices and visions of a collective of creators. By transitioning from individual experimentation to models of collective ownership and creation, we're not just enhancing the creative process; we're advocating for a more democratic and equitable AI landscape. This could be a step towards broadening the benefits and innovations of AI, ensuring they reflect a multitude of perspectives and styles.

The potential for generative AI as a tool for collective empowerment and artistic collaboration is immense. Picture a platform where artists from diverse backgrounds can contribute their unique data, co-create models that truly represent their collective ethos, and then decide on how to share their creations with the world. Such a model doesn't just amplify individual creativity; it reinforces the entire creative community's fabric.

Addressing challenges is equally crucial. The path to collective ownership and creation is fraught with hurdles—be it navigating the complexities of data rights and model licensing or ensuring the inclusivity of voices within the AI creation process. Overcoming these challenges requires open dialogue, shared learning, and a commitment to developing frameworks that are both inclusive and adaptable.

While the steps I’ve outlined are continuously evolving, they offer foundational points for considering the future of generative AI in the arts and beyond. As we navigate this emerging landscape together, it’s essential to keep the dialogue open, share knowledge, and build frameworks that embrace adaptability and inclusiveness. The future of generative AI provides a canvas broad enough for all creators to leave their mark, enabling a previously unimaginable richness of expression. By focusing on collective ownership and collaborative creation, we chart a course toward a future where technology acts as a catalyst for artistic innovation and cultural enrichment, embodying the spirit of 'by the creators, for the creators—and shared with the world.' 🌀

If you are someone or know someone thinking or building along these lines, please reach out on my socials (@markredito) or email. I’m eager to discuss and exchange ideas on how we can expand the boundaries of generative AI together. Whether it's through sharing resources, brainstorming on potential collaborations, or simply navigating the challenges and opportunities of this space, your insights are invaluable.