Special thanks to @0xkrane, @atiselsts_eth, Avi from @nil_foundation, @ChundaMcCain, @IsdrsP, @Li_Steven1, Maki and @Phil_Kelly_NYC from @o1_labs and @yilongl_megaeth for their invaluable discussions, insights, and feedback on this article!

Introduction

Today’s decentralized apps are limited in the complex computations they can perform on-chain because of blockchain’s restricted processing capabilities. However, technologies such as blockchain coprocessors are developing rapidly. Combined with game theory and mechanism design, they are enabling a new wave of use cases that can greatly improve user experience.

This article explores the design space of coprocessors, with a focus on potential use cases they empower.

Key takeaways:

-

Blockchain computation is expensive and limited; one solution is to move computation off-chain and verify the results on-chain through coprocessors, enabling more complex dapp logics.

-

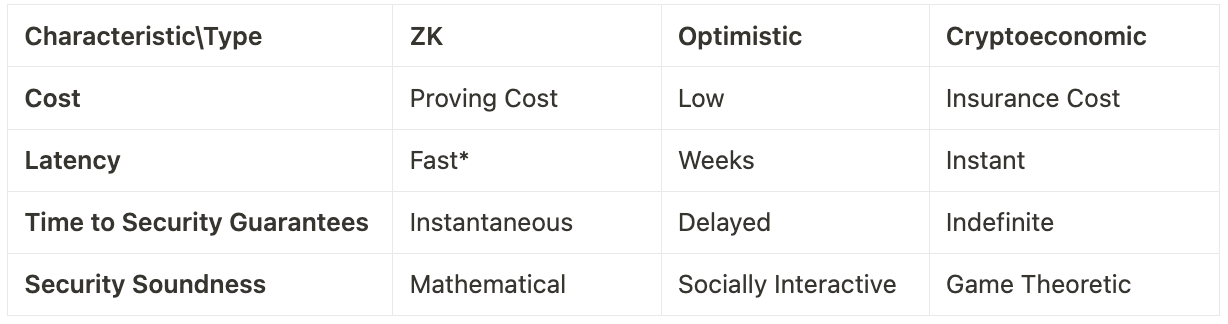

Coprocessors can be categorized into trustless (ZK), trust-minimized (MPC/TEE), optimistic, and cryptoeconomic based on their security assumptions. These solutions could also be combined to achieve desired security vs efficiency tradeoff.

-

Different types of coprocessors are suited for different tasks in DeFi. Potential use cases cover DEX (AMM & orderbook), money markets, staking, restaking, etc.

-

With the rise of decentralized AI, together with coprocessors, we are entering a new era of “Intelligent DeFi”.

The Role of Coprocessors

Blockchain is commonly viewed as a general-purpose CPU virtual machine (VM), which may not be ideal for heavy computations. Tasks involving data-driven analysis and intensive computation often require off-chain solutions. For instance, orderbook exchanges like dYdX v3 run their matching and risk engines on centralized off-chain servers, and only fund settlement takes place on-chain.

In computing, coprocessors are introduced to assist processors in performing specific tasks, as indicated by the prefix 'co-'. For instance, GPUs serve as coprocessors for CPUs. They excel at handling parallel computations required for tasks like 3D rendering and deep learning. This arrangement allows the primary CPU to concentrate on general-purpose processing. The coprocessor model has empowered computers to handle more complex workloads that would not have been feasible with a single, all-purpose CPU.

With coprocessors that can access on-chain data, blockchain applications can offer advanced features and make better-informed decisions. Offloading heavy computation in this way lets applications perform more complex tasks and become more "intelligent".

Different Types of Coprocessors

Based on their trust assumptions, coprocessors can be classified into three main types: Zero-Knowledge (ZK), Optimistic, and Cryptoeconomic.

ZK coprocessors, if implemented correctly, are theoretically trustless. They perform computations off-chain and submit proofs on-chain for verification. They can be fast, but the trade-off is the cost of generating proofs. As custom hardware and cryptography advance, the final cost passed on to end users could fall to a more acceptable level.

Axiom and RISC Zero Bonsai are examples of ZK coprocessors. They let arbitrary computations that access on-chain state run off-chain, and they produce proofs that each computation was performed correctly.

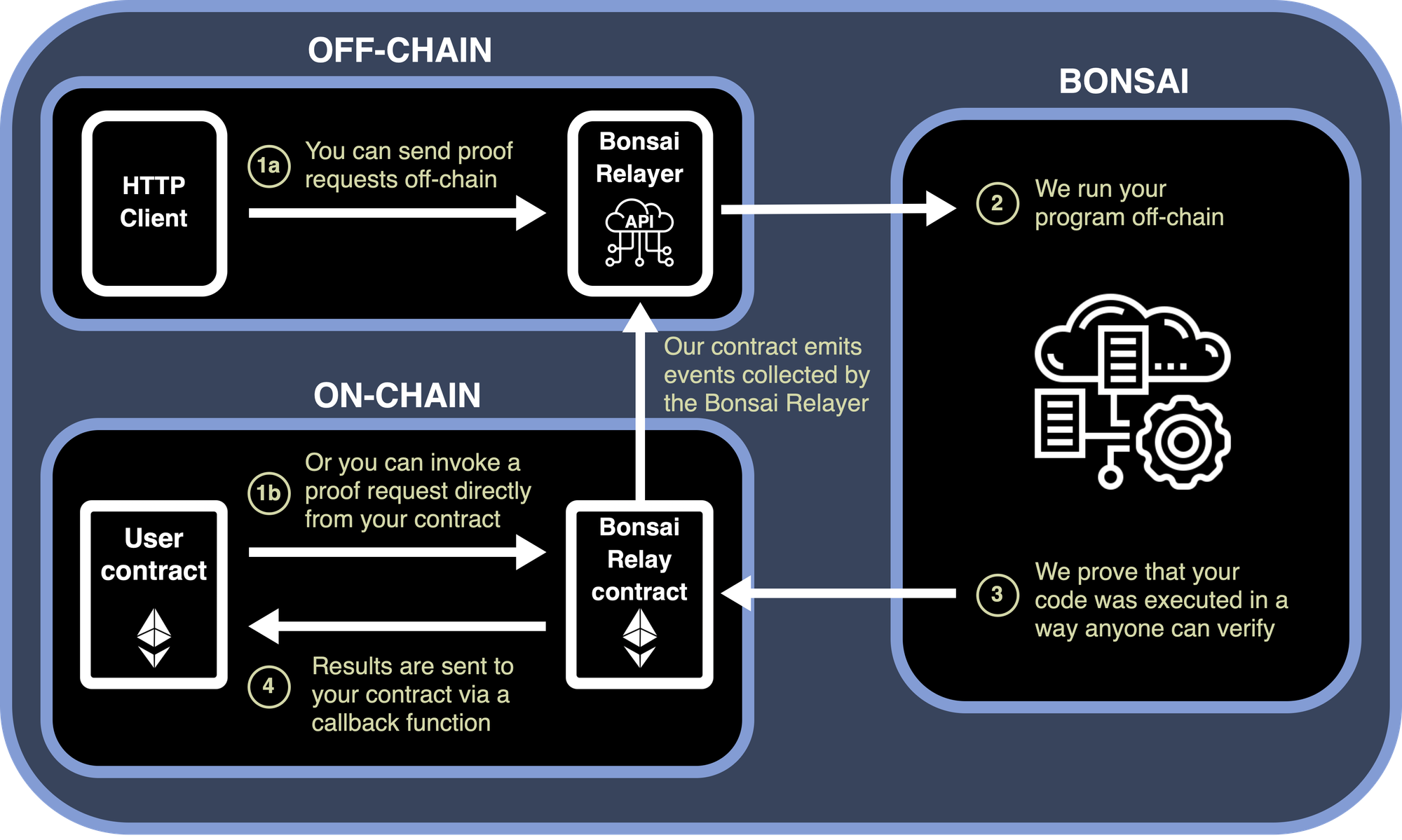

To provide a clearer understanding of how a typical ZK coprocessor operates, let's examine the workflow of RISC Zero Bonsai.

Applications send coprocessing requests to Bonsai Relay, which then forwards the proof request to the Bonsai proving service. The RISC Zero zkVM executes the program and generates a proof to validate the correct execution of the code, which can be verified by anyone. Subsequently, Bonsai Relay publishes the proof on-chain, and the applications receive the results through a callback function.

ZK coprocessors are one way to achieve verifiable off-chain computation, but alternatives such as MPC and TEEs take different approaches. MPC enables collaborative computation over sensitive data, while TEEs provide secure hardware-based enclaves. Each option has its own trade-offs between security and efficiency. This article focuses on ZK coprocessors.

Optimistic coprocessors are cost-effective but suffer from significant latency (typically weeks). They rely on honest parties to challenge incorrect results with fraud proofs within the challenge window. As a result, security guarantees only take effect once that window closes.

Cryptoeconomic coprocessors are optimistic coprocessors backed by a large enough economic bond on execution. They also have an on-chain insurance system that lets others claim compensation for erroneous computation. The bond and insurance can be purchased through shared security providers like Eigenlayer. The advantage is instant settlement. The downside is the cost of the insurance.

*Some proof generation times are already under a second (admittedly for small, optimized proofs), and they are improving rapidly.

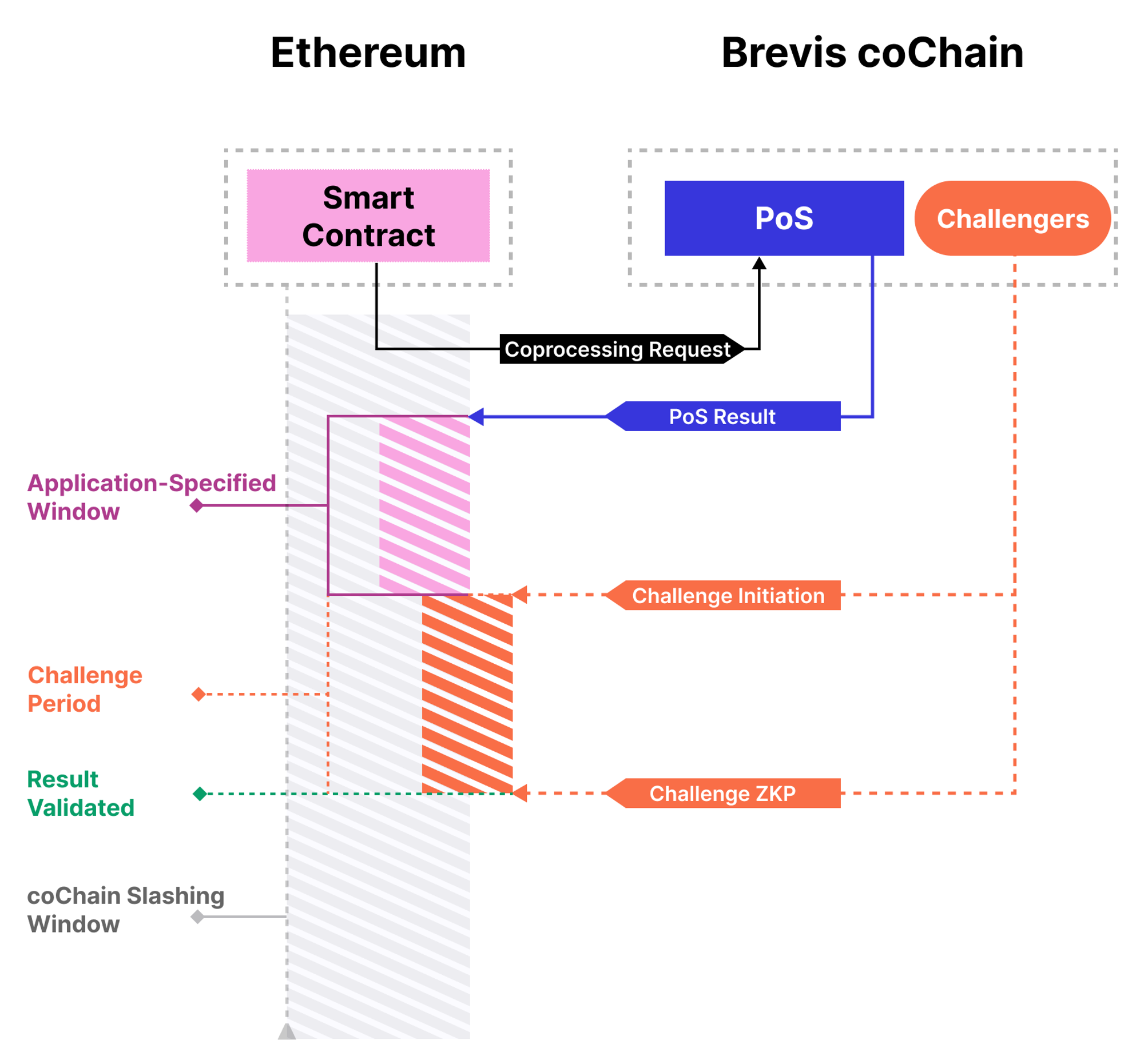

Different types of coprocessors have distinct cost, latency, and security characteristics, and combining them can optimize the user experience. A standout example is Brevis. Brevis initially launched as a ZK coprocessor and has since unveiled the Brevis coChain. The coChain combines cryptoeconomic security and ZK proofs within a ZK coprocessor, which reduces costs, minimizes latency, and improves user experience.

Pure ZK coprocessors, in their current state, still present challenges such as high proof generation costs and scalability issues. This is because ZK proofs for data access and computation results are always generated upfront. Leveraging Eigenlayer’s restaking infrastructure, Brevis coChain enables dapps to tailor the level of crypto-economic security they desire, granting them greater flexibility to enhance the user experience. Here's a simplified explanation of how it operates.

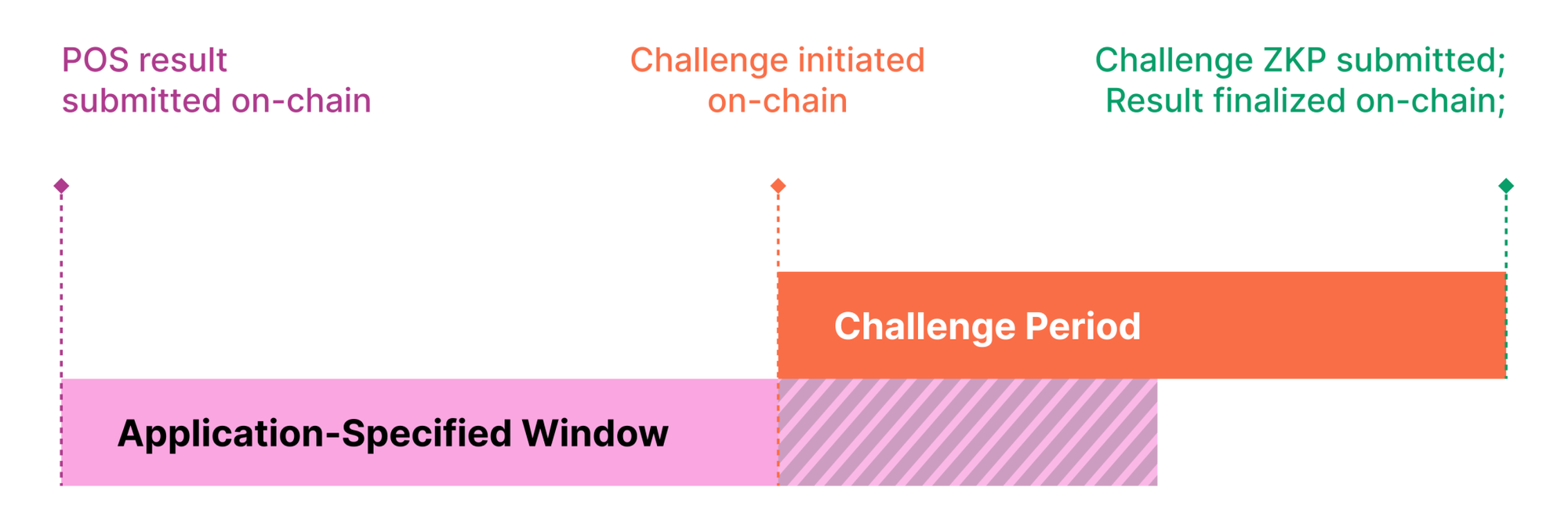

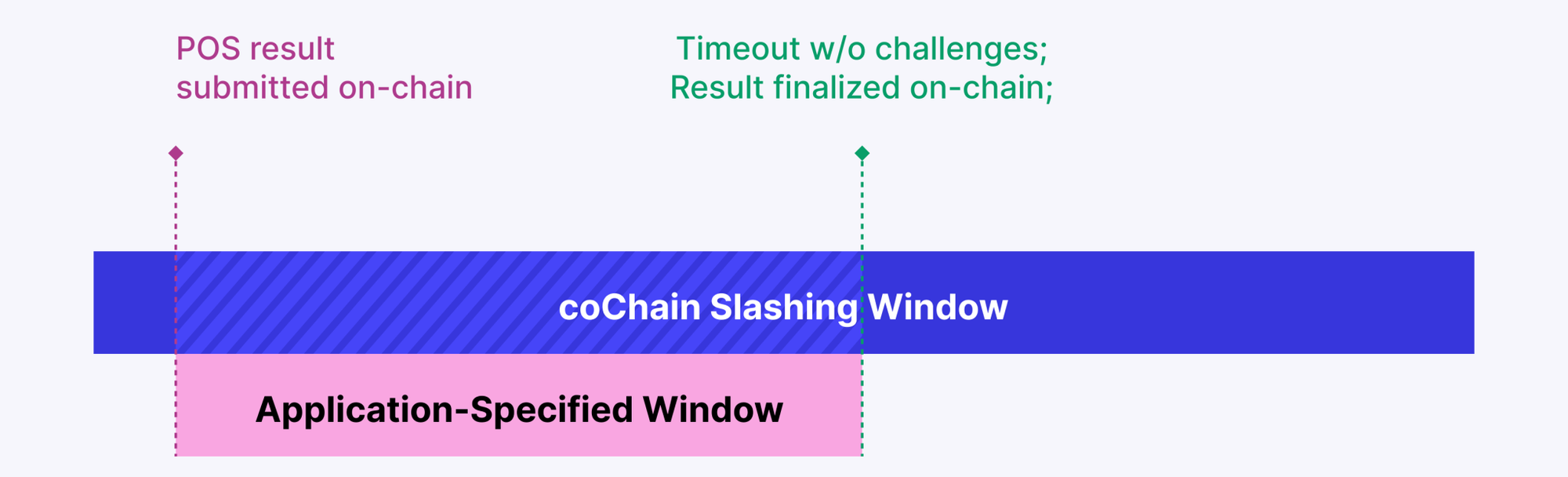

Brevis coChain first 'optimistically' generates a result for the coprocessing request based on PoS consensus. Two challenge windows then open. One is application-specific and configurable by developers. The other is the longer global coChain slashing window.

During the application challenge window, observers can submit a ZKP that contradicts the coprocessing result. A successful challenge slashes the proposer and rewards the challenger. A failed challenge results in the challenger’s bond being forfeited.

If there are no challenges, the app will deem the results valid. The global coChain slashing window is there for enhanced security. Even if an app accepts a faulty result, as long as the coChain slashing window is open, malicious validators can be slashed and incorrect results can be rectified.
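
The two-window flow can be modeled as a small state machine. This is a minimal sketch under several assumptions. The names (`CoprocessingResult`, `app_window`, `global_window`) are hypothetical, and the windows are measured in block numbers. ZK proof verification is abstracted as a boolean passed in by the caller. It is not Brevis's contract interface.

```python
from dataclasses import dataclass

@dataclass
class CoprocessingResult:
    """A result optimistically proposed by coChain validators (hypothetical model)."""
    value: int
    proposed_at: int      # block at which the PoS-agreed result was posted
    app_window: int       # application challenge window, configured by the dapp developer
    global_window: int    # longer global coChain slashing window
    slashed: bool = False

    def app_accepts(self, now: int) -> bool:
        # The app treats the result as valid once its own window passes.
        return now >= self.proposed_at + self.app_window

    def challenge(self, now: int, zk_proof_contradicts: bool, corrected_value: int) -> str:
        """Process a challenge backed by a ZK proof (verification abstracted as a bool)."""
        if now >= self.proposed_at + self.global_window:
            return "rejected: slashing window closed"
        if not zk_proof_contradicts:
            return "challenger bond forfeited"
        # A valid contradicting proof slashes the proposers and rectifies the result,
        # even if the app already accepted it.
        self.slashed = True
        self.value = corrected_value
        return "proposer slashed, result corrected"
```

In this model a result can be accepted by the app after a short window and still be corrected later, as long as the global slashing window is open. The real system would also move bonds and rewards between parties.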

As different types of coprocessors exhibit distinct cost, latency, and security characteristics, applications must assess their requirements to determine the type of coprocessors they need. If the computation involves high-security tasks, such as calculating balances of validators on the Beacon chain in liquid staking where billions of dollars are at stake, ZK coprocessors are the most appropriate choice. They provide maximum security since the results can be verified trustlessly. Additionally, latency is not a concern in such scenarios, allowing for the generation of proofs within acceptable timeframes.

Some tasks are less latency-sensitive and do not involve significant financial value, such as showcasing on-chain achievement metrics on your social profiles. For these, an optimistic coprocessor, which has the lowest off-chain computation cost, could be preferable.

For other tasks, cryptoeconomic coprocessors prove more cost-effective when the purchased insurance covers the at-risk value. The analysis of insurance costs should be done on a case-by-case basis, heavily influenced by the value facilitated by the application. These tasks often entail diverse analytics and risk modeling.

Another way to categorize coprocessors is by computation type, for example:

- Space and Time for database operations,
- MegaETH for EVM computations, and more.

The use of coprocessors in DeFi is an emerging area that holds great potential. In the following, I will outline existing ideas and implementations on how coprocessors could be utilized in various sectors within DeFi including DEX, money markets, staking, restaking, etc.

DEX

There are multiple stakeholders involved in a DEX. These include traders, liquidity providers, market makers, liquidity managers, solvers/fillers, and more. Coprocessors have the potential to efficiently streamline complex tasks with different levels of trust assumptions, ultimately enhancing the experience for these stakeholders.

Cost Reduction

In a basic AMM, one important function computes the necessary parameters when a user initiates a swap: the amounts swapped in and out, the fee, and the price after the swap. A straightforward way to harness the computational power of ZK coprocessors while keeping trust guarantees is to perform part of the swap function off-chain and complete the remaining steps on-chain. zkAMMs are a variant of Automated Market Makers (AMMs) that integrate zero-knowledge proofs in-protocol. Diego (@0xfuturistic) introduced a zkAMM implementation (zkUniswap) based on Uniswap v3, in which part of the AMM swap computation is offloaded to the RISC Zero zkVM. A user starts a swap by making a request on-chain. The relayer picks up the swap inputs and carries out the computation off-chain, then posts the output along with the proof. Finally, the AMM verifies the proof and settles the swap.
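
To make "computing the swap parameters" concrete, here is the kind of pure function a zkAMM could move into a zkVM guest. It swaps token0 for token1 within a single Uniswap v3 tick range, using the standard concentrated-liquidity relations Δ(1/√P) = Δx/L and Δ√P = Δy/L. This is a floating-point sketch. The real contracts use Q64.96 fixed-point math and handle tick crossings. The function name and the convention of charging the fee on the input are assumptions for illustration.

```python
def swap_exact_token0_for_token1(amount_in: float, sqrt_price: float,
                                 liquidity: float, fee: float):
    """Swap within one tick range (no tick crossing).

    Returns (amount_out, fee_paid, new_price), where price is token1 per token0.
    """
    fee_paid = amount_in * fee
    dx = amount_in - fee_paid  # the part of the input that actually moves the price
    # Adding token0 lowers sqrt(P): 1/sqrt(P') = 1/sqrt(P) + dx / L
    new_sqrt_price = liquidity * sqrt_price / (liquidity + dx * sqrt_price)
    # token1 released by the pool: dy = L * (sqrt(P) - sqrt(P'))
    amount_out = liquidity * (sqrt_price - new_sqrt_price)
    return amount_out, fee_paid, new_sqrt_price ** 2
```

With √P = 1 and L = 1000, the range behaves like a constant-product pool with 1000 units of each token. A zero-fee swap of 10 token0 therefore returns 1000·10/1010 token1.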

At this stage the computation cost is still comparable to the EVM's. Higher efficiency may be achievable by using RISC Zero’s continuations feature to compute swaps with independent paths in parallel. Swaps are still executed sequentially on-chain, but the actual swap steps can be computed in parallel off-chain. This parallelizes the heaviest part of a batch, which the EVM cannot do natively. Verification costs could also be amortized by batching multiple transactions together.
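
The amortization argument is simple arithmetic. Verifying one proof costs a roughly fixed amount of gas, so batching n swaps behind one proof divides that fixed cost among them. The gas figures in the usage note are placeholders for illustration. They are not measured numbers for zkUniswap or RISC Zero.

```python
def per_swap_gas(settle_gas: int, verify_gas: int, batch_size: int) -> float:
    """On-chain gas per swap when a single proof verification covers the whole batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # Each swap still pays its own settlement; the verification cost is shared.
    return settle_gas + verify_gas / batch_size
```

With a hypothetical 50,000 gas to settle and 300,000 gas to verify, the per-swap cost drops from 350,000 for a single swap to 80,000 in a batch of 10 and 53,000 in a batch of 100.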

Users can also send swap requests through an alternative data availability layer. Another approach is to use EIP-712 signatures for off-chain propagation, which can further reduce swap costs.

Dynamic Parameters

Coprocessors could also dynamically control the swap fee of an AMM pool. The idea behind a dynamic fee is to raise the fee rate during periods of market volatility and lower it when markets are calmer. This benefits passive liquidity providers. They consistently take the unfavorable side of trades and leak value through loss-versus-rebalancing (LVR). Dynamic fees aim to compensate LPs adequately for this.
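
One simple way a coprocessor could turn "raise fees in volatile markets" into a number is to compute realized volatility from recent prices and map it linearly onto a bounded fee range. The linear mapping, the bounds, and the parameter names are hypothetical choices for illustration. This is not any live AMM's formula.

```python
import math
from statistics import pstdev

def realized_volatility(prices: list[float]) -> float:
    """Population standard deviation of log returns over the observation window."""
    returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    return pstdev(returns)

def dynamic_fee(prices: list[float], base_fee: float = 0.0005, sensitivity: float = 0.5,
                min_fee: float = 0.0001, max_fee: float = 0.01) -> float:
    """Base fee plus a volatility premium, clamped to [min_fee, max_fee] (hypothetical rule)."""
    fee = base_fee + sensitivity * realized_volatility(prices)
    return min(max(fee, min_fee), max_fee)
```

A flat price series leaves the fee at the base rate. A choppy one raises it, up to the cap.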

Some AMMs already have this feature. For example, Ambient uses an external oracle that monitors Uniswap v3 pools with different fee tiers, takes snapshots of them every 60 minutes, and chooses the best-performing tier.

Additional on-chain and off-chain data can inform how the fee rate is adjusted. Possible sources include:

- historical trades in this particular AMM pool,
- trades of the same pair across other liquidity pools (as in the Ambient solution),
- trades in pools on other networks.

If certain trust assumptions are acceptable, off-chain data (e.g. CEX trade data) from reputable oracles like Chainlink or Pyth could also be introduced.

Which type of coprocessor to use depends on how often the fee is adjusted. Cryptoeconomic coprocessors may be more suitable for pools that need very frequent fee changes. For these pools, proving costs are likely to exceed insurance costs. Insurance costs can be estimated as the difference in fee rate multiplied by the average volume. If a calculation is wrong, LPs can claim insurance facilitated by Eigenlayer to compensate for their lost fees.

On the other hand, some pools prefer less frequent fee changes but handle very large volumes, which drives up the cost of insurance. For these pools, ZK coprocessors are more suitable because they provide the strongest guarantees.
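
The trade-off between these two cases can be written down directly. Following the text, the value at risk is approximated as the fee-rate error multiplied by the volume traded. The insurance premium is assumed to be a flat percentage of that value. The premium rate, the per-proof cost, and all figures are hypothetical inputs, not actual EigenLayer or prover pricing.

```python
def insurance_cost_per_day(fee_rate_error: float, daily_volume: float,
                           premium_rate: float) -> float:
    """Premium to insure LPs against a wrong fee: premium_rate x (fee error x volume)."""
    value_at_risk = fee_rate_error * daily_volume
    return premium_rate * value_at_risk

def proving_cost_per_day(updates_per_day: int, cost_per_proof: float) -> float:
    """Every fee update needs its own proof under a ZK coprocessor."""
    return updates_per_day * cost_per_proof

def cheaper_option(updates_per_day: int, cost_per_proof: float, fee_rate_error: float,
                   daily_volume: float, premium_rate: float) -> str:
    zk = proving_cost_per_day(updates_per_day, cost_per_proof)
    insured = insurance_cost_per_day(fee_rate_error, daily_volume, premium_rate)
    return "cryptoeconomic" if insured < zk else "zk"
```

Proving cost grows with update frequency, while insurance cost grows with volume. Under these assumptions, a pool updating every block with moderate volume favors the cryptoeconomic route. A pool updating hourly with very large volume favors ZK.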

Active Liquidity Manager (ALM)

Passive liquidity provision can be an attractive option for less experienced users who want to earn fees from their idle liquidity without being overly concerned about price deviations. However, some liquidity providers (LPs) are more susceptible to losses caused by price deviations and statistical arbitrages. We previously discussed how adjusting fees dynamically could mitigate this issue. But why not go a step further and completely change the shape of the liquidity curve? This is a more sophisticated approach to liquidity management known as Active Liquidity Managers (ALMs).

Unfortunately, most existing ALMs offer only basic strategies like rebalancing, which have limited impact on fee collection. Slightly more advanced techniques exist, such as hedging with money markets or derivatives. However, these either incur high costs when executed frequently on-chain or rely on centralized, black-box off-chain computation.

Coprocessors have the potential to tackle cost and trust issues, enabling the adoption of advanced strategies. By integrating with cutting-edge zero-knowledge machine learning (ZKML) solutions such as Modulus Labs and decentralized AI platforms like Ritual, liquidity managers can leverage complex strategies based on historical trade data, price correlations, volatility, momentum, and more while enjoying the advantages of privacy and trustlessness.

High-frequency trading strategies require precise timing and rapid execution. ZK solutions may not always be fast enough, but cryptoeconomic coprocessors excel here. They allow AI algorithms to run quickly, with parameters updated as often as the block time allows. However, this approach comes with insurance costs. Those costs are hard to estimate accurately because of risks such as managers mishandling funds or engaging in counter-trades. The decision involves weighing the additional returns against the insurance expenses, which ultimately depend on the total volume within the coprocessor's measured timeframe. Scaling may also prove difficult, given the limited capital accessible in a single AVS and the difficulty of predicting the value at risk at any given moment.

Metrics-Based Reward Distribution

While each transaction is recorded on the blockchain, smart contracts face challenges in determining the metrics these transactions represent, such as transaction volume, number of interactions, TVL per unit of time, etc. One might suggest using indexing solutions like Dune Analytics, which provide valuable information. However, relying on off-chain indexing introduces an additional layer of trust. This is where coprocessors emerge as a promising solution.

One particularly valuable on-chain metric is volume, for example the accumulated volume of a particular address in a specific AMM pool over a given range of blocks. This metric is very useful for DEXs. One use case is setting different fee tiers for users based on their trading volume. The approach is similar to dynamic fees, but it relies on address-specific data instead of general market data.

Brevis provides an interesting example in which a volume proof is combined with a customized fee-rebate Uniswap hook. The result is volume-based fee rebates, similar to the VIP tiers that CEXes offer their traders.

Specifically, with Brevis, Uniswap v4 can read a user’s historical transactions over the past 30 days, parse each trade event with customized logic, and compute the user's trading volume. The trading volume and a ZK proof generated by Brevis are then verified trustlessly in a Uniswap v4 hook smart contract. The hook determines and records the user’s VIP fee tier asynchronously. After verification, every future trade by an eligible user triggers the getFee() function, which simply looks up the VIP record and reduces the user's trading fees accordingly.
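
The VIP flow can be sketched as two steps. The first is an off-chain computation of an address's trailing volume from parsed trade events, which is the part Brevis proves. The second is an on-chain lookup that `getFee()` performs once the proof has been verified. The tier thresholds, fee values, event format, and function names are hypothetical. This does not reproduce the Brevis SDK or the Uniswap v4 hook interface. Fees use Uniswap's unit of hundredths of a basis point, so 3000 means 0.30%.

```python
from dataclasses import dataclass

@dataclass
class TradeEvent:
    trader: str
    block: int
    volume_usd: float

# Hypothetical tiers: (minimum trailing volume in USD, fee in hundredths of a bip)
VIP_TIERS = [(10_000_000, 1500), (1_000_000, 2500), (0, 3000)]

def trailing_volume(events, trader, from_block, to_block):
    """Off-chain (proved) part: sum one trader's volume over a block range."""
    return sum(e.volume_usd for e in events
               if e.trader == trader and from_block <= e.block <= to_block)

def tier_fee(volume):
    for threshold, fee in VIP_TIERS:
        if volume >= threshold:
            return fee
    raise ValueError("volume cannot be negative")

class VipFeeHook:
    """On-chain part: stores each trader's tier after verification; get_fee is a lookup."""
    DEFAULT_FEE = 3000

    def __init__(self):
        self.vip_fee = {}

    def record_verified_volume(self, trader, volume):
        # In the real flow this runs only after the ZK proof of `volume` verifies.
        self.vip_fee[trader] = tier_fee(volume)

    def get_fee(self, trader):
        return self.vip_fee.get(trader, self.DEFAULT_FEE)
```

Because the expensive volume computation happens once and asynchronously, each later swap pays only for a dictionary-style lookup.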

Getting certified as a "VIP" is also inexpensive: around $2.50, based on Brevis's benchmark results. Costs can be reduced further by aggregating proofs for multiple users with solutions like NEBRA. The only trade-off is latency. Accessing and computing over 2,600 on-chain Uniswap transactions took approximately 400 seconds. This matters less for features that are not time-sensitive.

To address latency concerns, dapps could leverage Brevis’s coChain. Results are computed and delivered swiftly through a PoS consensus mechanism to minimize delays. In case of malicious activities, a ZKP can be used during the challenge window to penalize the rogue validators.

For instance, in the VIP fee scenario mentioned earlier, if over ⅔ of coChain validators deceitfully assign a higher VIP tier to certain users in a "VIP tier lookup table" linked to the dynamic fee hook, some users might initially receive larger fee discounts. However, when a ZK proof is presented during the slashing window, demonstrating that the VIP tiers are incorrect, the malicious validators will face penalties. The erroneous VIP tiers can then be rectified by enabling the challenge callback to update the VIP tier lookup table. For more cautious scenarios, developers can opt to implement extended application-level challenge windows, providing an additional layer of security and adaptability.

Liquidity Mining

Liquidity mining is a form of reward distribution intended to bootstrap liquidity. Through coprocessors, DEXs could gain a deeper understanding of their liquidity providers' behavior and distribute liquidity mining rewards or incentives accordingly. Not all LPs are alike: some act as mercenaries, while others are loyal long-term believers.

The optimal liquidity incentive should retrospectively evaluate the dedication of LPs, particularly during significant market fluctuations. Those who consistently provide support to the pool during such periods should receive the highest rewards.
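
One way to express "reward those who stayed through volatility" is to weight each LP's liquidity in each period by that period's volatility. Liquidity supplied during turbulent periods then earns more. The per-period snapshot format and the volatility weighting are illustrative assumptions, not a scheme any particular DEX uses.

```python
def distribute_rewards(total_reward: float, period_volatility: list[float],
                       lp_liquidity: dict[str, list[float]]) -> dict[str, float]:
    """Split total_reward pro rata to each LP's volatility-weighted liquidity.

    period_volatility: one volatility value per period.
    lp_liquidity: {lp: [liquidity in each period]}, e.g. proved from on-chain snapshots.
    """
    scores = {
        lp: sum(liq * vol for liq, vol in zip(series, period_volatility))
        for lp, series in lp_liquidity.items()
    }
    total = sum(scores.values())
    if total == 0:
        return {lp: 0.0 for lp in lp_liquidity}
    return {lp: total_reward * s / total for lp, s in scores.items()}
```

An LP who withdraws during the one volatile period loses most of their share, even if they supplied the same liquidity in the calm periods.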

Solver/Filler Reputation System

In an intent-centric future, solvers/fillers play a crucial role by simplifying complex transactions and achieving faster, cheaper, or better results. However, the way solvers are selected continues to draw criticism. Current solutions include:

- A permissionless system that uses Dutch auctions or fee escalators. However, it is hard to keep such an auction environment competitive and permissionless. This can lead to latency or even non-execution for users.
- A permissionless system that requires staking tokens to participate. This creates a financial barrier to entry and may lack clear slashing/penalty conditions or transparent, trustless enforcement.
- A whitelist of solvers established based on reputation and relationships.

The path ahead should be both permissionless and trustless. Getting there requires guidelines that distinguish great solvers from those that are not so great. ZK coprocessors can generate verifiable proofs showing whether a given solver meets these guidelines. Based on this information, solvers can receive priority order flow or face slashing, suspension, or even blacklisting. Ideally, better solvers would receive more order flow and worse solvers less. These ratings should be reviewed and updated periodically to prevent entrenchment and promote competition, giving newcomers a fair chance to participate.
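
Here is a minimal sketch of the rating loop. Each epoch, proved facts about a solver's fills update an exponentially decaying score. Order flow is allocated in proportion to score, and solvers below a floor are suspended. The metric (share of fills that met the guideline), the decay factor, the neutral starting score, and the suspension threshold are all hypothetical. The decay is one way to keep old performance from entrenching incumbents.

```python
from dataclasses import dataclass

@dataclass
class SolverRecord:
    score: float = 0.5  # newcomers start at a neutral score

def update_score(record: SolverRecord, fills_meeting_guideline: int,
                 total_fills: int, decay: float = 0.8) -> float:
    """Blend this epoch's proved success rate into an exponentially decaying score."""
    if total_fills == 0:
        return record.score
    rate = fills_meeting_guideline / total_fills
    record.score = decay * record.score + (1 - decay) * rate
    return record.score

def allocate_order_flow(records: dict[str, SolverRecord],
                        suspend_below: float = 0.2) -> dict[str, float]:
    """Share of order flow per solver, proportional to score; suspended solvers get none."""
    eligible = {name: r.score for name, r in records.items() if r.score >= suspend_below}
    total = sum(eligible.values())
    return {name: (eligible.get(name, 0.0) / total if total else 0.0) for name in records}
```

A consistently good solver climbs toward a score of 1, and a consistently bad one decays below the floor within a few epochs. A newcomer still receives a meaningful share from day one.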

Manipulation-Resistant Price Oracle

Uniswap has already introduced embedded oracles in its v2 and v3 versions. With the release of v4, Uniswap has expanded the possibilities for developers by introducing more advanced oracle options. However, there are still limitations and constraints when it comes to on-chain price oracles.

Firstly, there is cost. If a coprocessor-computed price oracle offers cost improvements, it could serve as a more affordable alternative. The more complex the price oracle design, the greater the potential savings.

Secondly, on-chain price oracles remain susceptible to manipulation. A common countermeasure is to aggregate prices from different sources and compute a more manipulation-resistant price. Coprocessors can retrieve historical trades from many pools, even across protocols. This lets them produce a manipulation-resistant price oracle that other DeFi protocols can integrate at competitive cost.
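
A common aggregation pattern is a time-weighted average price (TWAP) per pool, followed by a median across pools. This is a reasonable guess at what such an oracle would compute. With a median, a single manipulated pool cannot move the result on its own. The observation format is hypothetical, and this is not the Uniswap oracle API.

```python
from statistics import median

def twap(observations: list[tuple[int, float]]) -> float:
    """observations: (timestamp, price) sorted by time; each price holds until the next timestamp."""
    if len(observations) < 2:
        raise ValueError("need at least two observations")
    weighted = sum(p * (t_next - t)
                   for (t, p), (t_next, _) in zip(observations, observations[1:]))
    return weighted / (observations[-1][0] - observations[0][0])

def aggregated_price(pools: list[list[tuple[int, float]]]) -> float:
    """Median of per-pool TWAPs, possibly across different protocols."""
    return median(twap(obs) for obs in pools)
```

Both steps resist manipulation in different ways. Time-weighting forces an attacker to hold a distorted price for a long time, and the median requires corrupting a majority of pools at once.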

DIA Data is working on ZK-based oracles with O(1) Labs from the Mina ecosystem. The approach is similar: take market data and perform more sophisticated calculations off-chain, free of gas costs and other execution constraints, while still being able to verify the integrity of the calculation when the result is served on-chain. This could make it feasible to supplement simple price feeds with other market data. Examples include depth, which helps assess liquidation impact, and metadata that lets protocols customize their feeds.

Margin Systems

To overcome the computational limitations of blockchain technology, many derivatives platforms frequently move certain components, such as risk management systems, off-chain.

@0x_emperor and @0xkrane propose an interesting use case of coprocessors where the margining logic is transparent and verifiable. In many exchanges, risk management systems are in place to prevent excessive leverage. One such example is the Auto Deleveraging System (ADL), which strategically allocates losses to profitable traders in order to offset the losses experienced by liquidated traders. Essentially, it redistributes the losses among profitable traders to cover the unpaid debts resulting from these liquidations.
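
To show what a verifiable margin engine would actually compute, here is a simplified ADL pass. Profitable traders cover the liquidation shortfall in order of a ranking score, and each contributes up to their unrealized profit. Ranking by profit times leverage is a common convention, but it is an assumption here and not taken from any specific exchange. Real engines close positions rather than simply debiting PnL.

```python
from dataclasses import dataclass

@dataclass
class Position:
    trader: str
    unrealized_pnl: float
    leverage: float

def auto_deleverage(shortfall: float, positions: list[Position]) -> dict[str, float]:
    """Return {trader: amount deducted} that covers `shortfall` using profitable positions."""
    # Highest-ranked (most profitable x most leveraged) traders are deleveraged first.
    queue = sorted((p for p in positions if p.unrealized_pnl > 0),
                   key=lambda p: p.unrealized_pnl * p.leverage, reverse=True)
    deductions = {}
    remaining = shortfall
    for p in queue:
        if remaining <= 0:
            break
        take = min(p.unrealized_pnl, remaining)
        deductions[p.trader] = take
        remaining -= take
    if remaining > 0:
        raise RuntimeError("profitable positions cannot cover the shortfall")
    return deductions
```

The whole pass is a deterministic function of position data. That is what makes it a good fit for a ZK coprocessor: any user can check the proof that their position was selected according to the published rule.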

Users may question why their positions were forcibly closed. To address this, the exchange could use coprocessors to run the margin engine logic on on-chain data and generate proofs that the computation was correct. Since ADL events are infrequent, latency and proving costs are minor concerns. Meanwhile, trustless and verifiable ZK coprocessors improve transparency and integrity, which benefits both the exchange and its users.

Money Market

By leveraging insights from historic on-chain data, coprocessors have the potential to enhance risk management for LPs and lending protocols. Additionally, protocols can offer improved user experience based on data-driven analytics.

When Curve suffered an exploit some months ago, attention turned to money markets, where millions of CRV tokens were at risk of liquidation. Frax lenders took some comfort in the protocol's aggressive interest rate increases once the loan-to-value (LTV) ratio became unhealthy, which pushed the Curve founder to repay the debt more quickly. Aave stakeholders, however, raised concerns and began discussing reducing collateral capacity and potentially halting the market. They feared there might not be enough liquidity for liquidations to succeed. That could have left bad debt and made the protocol vulnerable to market conditions.

Fortunately, the crisis has been resolved. It is important to regularly review assets listed on money markets, with a particular focus on their liquidity in the market, especially during liquidation events. Illiquid assets should be assigned a lower loan-to-value (LTV) ratio and collateral capacity.

However, the decision-making process for risk parameter changes in money markets is often reactive, as we observed in the CRV situation. We need more prompt and proactive measures, including trustless solutions. There have been discussions regarding the use of Feedback Controls to dynamically adjust parameters based on on-chain metrics, such as liquidity utilization, instead of relying on a pre-determined curve. One intriguing concept involves a lending pool that verifies proof of on-chain liquidity for a specific market. The controller receives proof calculated from on-chain metrics by ZK coprocessors, indicating when an asset is no longer sufficiently liquid beyond a certain threshold. Based on this information, the controller can take various measures, such as adjusting interest rates, setting LTV caps, suspending the market, or even discontinuing it altogether.
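
The controller logic can be sketched as a function that maps a proved liquidity metric to an action. The metric here is assumed to be "coverage", which is the provable on-chain depth divided by the collateral that might need to be liquidated. The thresholds and actions are hypothetical placeholders for values a DAO would set.

```python
from enum import Enum

class Action(Enum):
    NONE = "no change"
    RAISE_RATE = "raise interest rate"
    CAP_LTV = "lower LTV cap"
    SUSPEND = "suspend new borrowing"
    DELIST = "begin market wind-down"

# Hypothetical DAO-set floors on coverage = on-chain depth / collateral at risk
THRESHOLDS = [(1.0, Action.NONE), (0.5, Action.RAISE_RATE),
              (0.25, Action.CAP_LTV), (0.1, Action.SUSPEND)]

def controller_action(onchain_depth_usd: float, collateral_usd: float,
                      proof_valid: bool) -> Action:
    """Choose an action from a liquidity metric computed and proved by a ZK coprocessor."""
    if not proof_valid:
        return Action.NONE  # never act on an unverified input
    coverage = onchain_depth_usd / collateral_usd
    for floor, action in THRESHOLDS:
        if coverage >= floor:
            return action
    return Action.DELIST
```

Because each action is tied to a verified number, the response can be automatic instead of waiting for a governance debate, while the DAO still controls the thresholds.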

More advanced strategies could include periodically adjusting the collateral borrow capacity or interest rate curve based on the previous week's on-chain liquidity. The exact threshold would be determined through discussions within the DAO. It could be determined by considering factors such as historic on-chain volume, token reserves, minimum slippage for a lump-sum swap, and so on.
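
One of the listed factors, slippage for a lump-sum swap, follows directly from the constant-product rule x·y = k. Selling Δx against a reserve x loses a fraction Δx/(x+Δx) of the spot value, ignoring fees. A hypothetical policy could then reduce the maximum LTV by that loss. The pool model, the reserves, and the LTV rule are assumptions for illustration.

```python
def lump_sum_slippage(amount_in: float, reserve_in: float) -> float:
    """Fraction of spot value lost selling amount_in into an x*y=k pool (fees ignored)."""
    return amount_in / (reserve_in + amount_in)

def adjusted_max_ltv(base_ltv: float, liquidation_size: float,
                     pool_reserves: list[float], safety_margin: float = 1.0) -> float:
    """Hypothetical rule: reduce the max LTV by the slippage a full liquidation would incur.

    Uses the single deepest pool (a conservative simplification with no routing across pools).
    """
    best = min(lump_sum_slippage(liquidation_size, r) for r in pool_reserves)
    return max(0.0, base_ltv - safety_margin * best)
```

For example, liquidating 100 units into a pool holding 900 loses 10% to slippage, so a base LTV of 80% would drop to 70%. Recomputing this weekly from proved reserves gives the periodic adjustment described above.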

For lenders and borrowers, money markets can provide enhanced services and experiences, similar to fee rebate programs for VIP traders in DEXs. There are existing credit score solutions that aim to create a comprehensive profile of on-chain users. The goal is to incentivize good behaviors, such as effective risk management demonstrated by avoiding liquidation events, maintaining healthy average LTV ratios, making stable large deposits, and more. Trustless rewards can be given for these positive behaviors, including better and smoother interest rates compared to average users, higher maximum LTV and liquidation ratios, a buffer time for liquidation, lower liquidation fees, and more.

Staking & Restaking

Trust-minimized Oracle

Since the Merge and the Shanghai/Shapella upgrade, liquid staking has become the largest market in DeFi. Notably, Lido has amassed over $29 billion in TVL, while Rocket Pool holds over $3.6 billion.

Given the substantial amount of money involved, it is important to note that the oracles used to report information, such as accurate balances of associated validators on the beacon chain, are still trusted. These oracles play a crucial role in distributing rewards to stakers on the execution layer.

Currently, Lido uses a 5-of-9 quorum mechanism and maintains a list of trusted members to guard against malicious actors. Similarly, Rocket Pool has an invite-only Oracle DAO of node operators who are trusted to update reward information in the smart contracts on the execution layer.
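
A k-of-n quorum like this can be sketched as follows: a report is accepted only once at least k distinct trusted members have submitted the identical report. This is a simplified model with hypothetical member names and report tuples. Lido's actual oracle contracts handle this in more detail (for example, with report hashes and reporting frames).

```python
from collections import Counter

def reach_consensus(submissions: dict, members: set, quorum: int = 5):
    """Return the report backed by at least `quorum` trusted members, or None.

    submissions: {member: report}, where a report is any hashable value,
    e.g. a (total consensus-layer balance, validator count) tuple.
    """
    # Count only votes from trusted members; one vote per member by construction.
    votes = Counter(report for member, report in submissions.items() if member in members)
    if not votes:
        return None
    report, count = votes.most_common(1)[0]
    return report if count >= quorum else None
```

With 9 members and a quorum of 5, two conflicting reports can never both reach quorum. Five colluding members, however, are enough to push any report through, which is the trust assumption the following sections aim to reduce.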

However, it is essential to recognize that if a majority of trusted third parties were compromised, it could significantly harm liquid staking token (LST) holders and the entire DeFi ecosystem built on top of LSTs. To mitigate the risk of erroneous/malicious oracle reports, Lido has in place a series of sanity checks that are implemented in the execution layer code of the protocol.

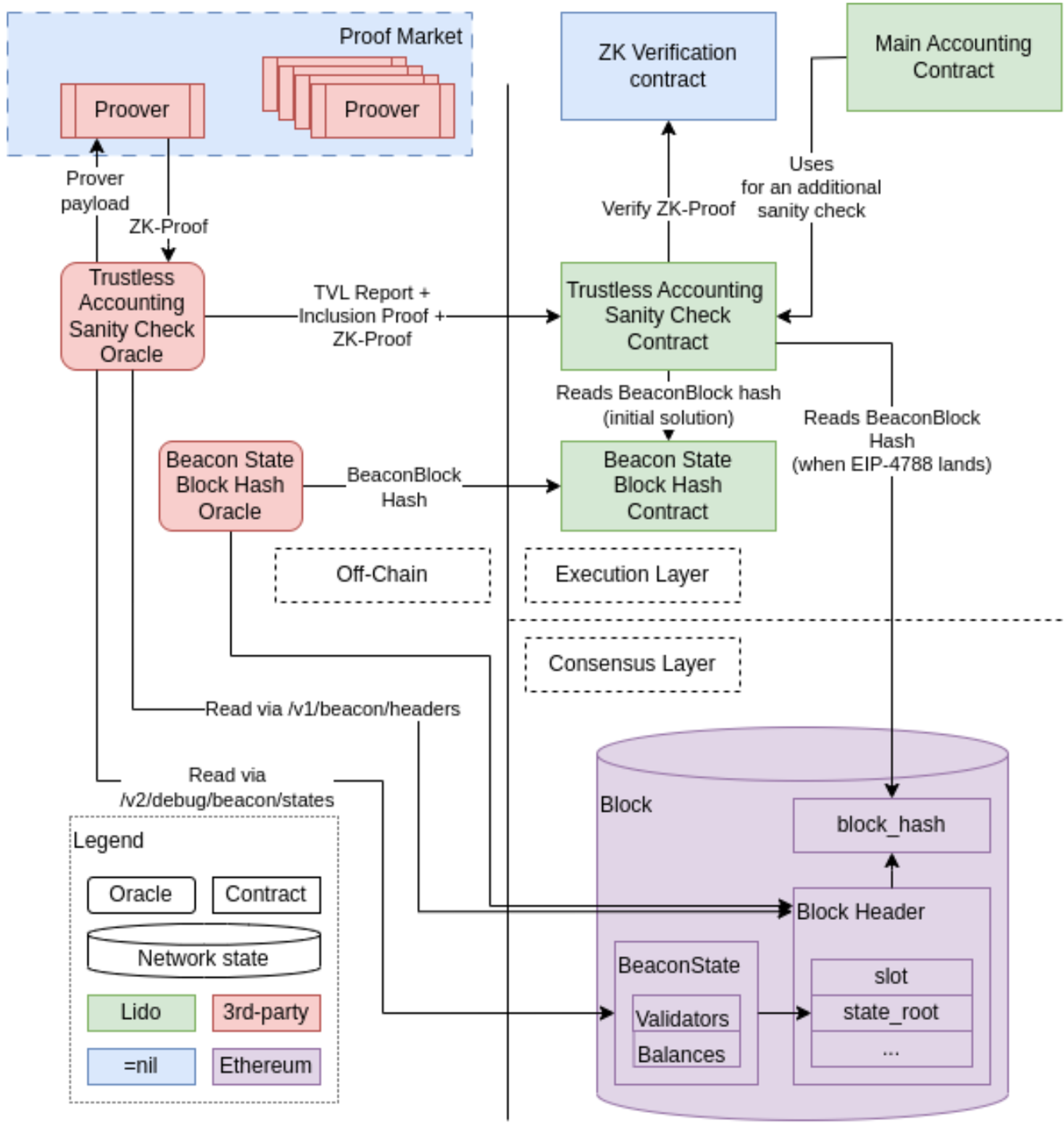

EIP-4788 ("beacon block root in the EVM") makes it easier for coprocessors to access and compute over consensus-layer data. =nil; Foundation, Succinct, and DendrETH are each developing a ZK-proof TVL oracle for Lido. For maximum security, Lido could use a multi-proof architecture.

Take =nil;’s design as an example. At a high level, the oracle obtains essential information from the Consensus and Execution layers, such as the Beacon Block Header, the Beacon State, and Lido contract addresses. It then computes a report on the total locked value and validator count for all Lido validators. These data, along with other necessary information, are passed to the proof producer and run through specialized circuits to generate a ZK proof. The oracle retrieves the proof and submits both the proof and its report to the smart contract for verification. Note that these oracle designs are still being tested and are subject to change.
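
At its core, the report is a filter-and-sum over the beacon state. Select the validators whose withdrawal credentials point to the protocol, then total their balances and count them. The sketch below does this in plain Python. The `Validator` record and the credential value are placeholders. In =nil;'s design the equivalent computation runs inside circuits so the result can be proven. This code does not attempt that.

```python
from dataclasses import dataclass

@dataclass
class Validator:
    pubkey: str
    withdrawal_credentials: str
    balance_gwei: int

def tvl_report(validators: list[Validator], protocol_credentials: str) -> tuple[int, int]:
    """Return (total balance in gwei, validator count) for validators bound to the protocol."""
    matched = [v for v in validators if v.withdrawal_credentials == protocol_credentials]
    total_gwei = sum(v.balance_gwei for v in matched)
    return total_gwei, len(matched)
```

The hard part in practice is not this arithmetic. It is proving that the validator list really is the full beacon state committed to by a block root, which is what the circuits and EIP-4788 are for.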

However, because EIP-4788 exposes only limited information, some data may never be provable on the EL side, so oracles may still be required for that subset.

Additionally, trust-minimized ZK-proof oracles are still in their infancy. The proposed approach by Lido contributors is to use the info provided by ZK oracles as a “sanity check” against the work done by the trusted oracles until these ZK implementations can be battle tested. It would be too risky to move all of the trust that’s currently in the oracle system to ZK systems at this stage.
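
Using ZK oracles as a "sanity check" amounts to comparing two numbers: the balance reported by the trusted oracle and the independently proven one. This is a minimal sketch. It assumes a relative tolerance (the 0.1% value is hypothetical) and assumes a mismatch flags the report for review rather than overriding it. That matches the point that trust should not yet move fully to ZK systems.

```python
def check_report(trusted_balance_gwei: int, zk_balance_gwei: int,
                 zk_proof_valid: bool, tolerance: float = 0.001) -> str:
    """Cross-check a trusted oracle report against a ZK-proven balance."""
    if not zk_proof_valid:
        # ZK oracle unavailable or proof invalid: fall back to the trusted report alone.
        return "accept (no zk check)"
    deviation = abs(trusted_balance_gwei - zk_balance_gwei) / zk_balance_gwei
    return "accept" if deviation <= tolerance else "flag for review"
```

A small tolerance absorbs timing differences between the two reports, while a large discrepancy points to either a faulty trusted oracle or a faulty ZK implementation.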

Furthermore, the proofs for data of this size are very computationally heavy (e.g. can take even 30-45 minutes) and very expensive, so they are not a suitable replacement at the current maturation of the technology for things like daily or even intra-day reporting.

Validator Risk and Performance Analytics

Validators play a crucial role in the staking ecosystem. They lock up 32 ETH on the beacon chain and provide validation services. If they behave properly, they earn rewards; if they misbehave, they face slashing. Validators are run by node operators with different risk profiles. Operators may be curated (e.g. Lido’s Curated Validator Set), bonded (e.g. Rocket Pool, Lido’s CSM), or solo stakers. They may run their services in cloud data centers or at home, in regions that are either friendly or unfriendly to crypto regulation. Validators can also use DVT to distribute a validator across multiple nodes or to join clusters for better fault tolerance. As Eigenlayer and various AVSs (Actively Validated Services) emerge, validators could offer services beyond validating Ethereum. Validator risk profiles will therefore become complex, which makes accurate assessment essential. Good validator risk and performance analytics open the door to many possibilities, including:

First and foremost, risk assessment plays a crucial role in establishing a permissionless validator set. In the context of Lido, the introduction of the Staking Router and the future EIP-7002 "Execution layer triggerable exits" could pave the way for enabling permissionless joining and exiting of validators. The criteria for joining or exiting can be determined based on the risk profile and performance analytics derived from a validator's past validating activities.

Second, node selection in DVT. For a solo staker, it may be beneficial to choose other nodes to create a DVT cluster. This can help achieve fault tolerance and increase yields. The selection of nodes can be based on various analytics. Additionally, the formation of the cluster can be permissionless, allowing nodes with a strong historical performance to join while underperforming nodes may be removed.

Third, restaking. Liquid Restaking Protocols enable restakers to participate in the Eigenlayer restaking marketplace. These protocols not only produce liquid receipts called Liquid Restaking Tokens (LRT) but also aim to secure the best risk-adjusted returns for restakers. For instance, one of Renzo's strategies involves building the AVS portfolio with the highest Sharpe Ratio while adhering to a specified target maximum loss, adjusting risk tolerance and weights through DAO. As more AVS projects are launched, the importance of optimizing support for specific AVS and selecting the most suitable AVS operators becomes increasingly crucial.
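
Renzo's stated objective is the highest Sharpe ratio subject to a target maximum loss. It can be illustrated with a brute-force search over portfolio weights. Everything numeric here is hypothetical: the AVS return series, the zero risk-free rate, and the 10% grid step. "Maximum loss" is assumed to mean the worst single-period portfolio return. This is not Renzo's methodology.

```python
from itertools import product
from statistics import mean, stdev

def portfolio_returns(weights: list[float], returns_by_avs: list[list[float]]) -> list[float]:
    """Per-period portfolio return given weights and one return series per AVS."""
    return [sum(w * r[t] for w, r in zip(weights, returns_by_avs))
            for t in range(len(returns_by_avs[0]))]

def sharpe(returns: list[float], risk_free: float = 0.0) -> float:
    sd = stdev(returns)
    return (mean(returns) - risk_free) / sd if sd > 0 else float("-inf")

def best_allocation(returns_by_avs: list[list[float]], max_loss: float, step: float = 0.1):
    """Grid-search long-only weights summing to 1; skip any whose worst period < -max_loss."""
    n = len(returns_by_avs)
    steps = round(1 / step)
    best, best_w = float("-inf"), None
    for combo in product(range(steps + 1), repeat=n):
        if sum(combo) != steps:
            continue  # weights must sum to exactly 1
        w = [c / steps for c in combo]
        port = portfolio_returns(w, returns_by_avs)
        if min(port) < -max_loss:
            continue  # violates the target maximum loss
        s = sharpe(port)
        if s > best:
            best, best_w = s, w
    return best_w, best
```

A grid search keeps the example transparent but scales exponentially with the number of AVSs. A real allocator would use an optimizer and richer risk measures, such as slashing probabilities, rather than a handful of historical periods.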

So far, we emphasized the significance of validator risk and performance analytics, as well as the wide range of use cases it enables. However, the question remains: How do we accurately assess the risk profile of validators? One potential solution is being developed by Ion Protocol.

Ion Protocol is a lending platform that utilizes provable validator-backed data. It enables users to borrow ETH against their staked and restaked positions. Loan parameters, including interest rates, LTVs, and position health, are determined by consensus layer data and safeguarded with ZK data systems.

Ion is collaborating with the Succinct team on Precision—a trustless framework to verify the economic state of validators on Ethereum’s consensus layer. This aims to create a verifiable system that accurately assesses the value of collateral assets, mitigating any potential manipulation or slashing risks. Once established, this system could facilitate loan origination and liquidation processes.

Ion is also partnering with Modulus Labs, leveraging ZKML for trustless analysis and parametrization of lending markets, including interest rates, LTVs, and other market details to minimize risk exposure in the event of aberrant slashing incidents.

Conclusion

DeFi is truly remarkable as it revolutionizes the way financial activities are conducted, eliminating the need for intermediaries and reducing counterparty risks. However, DeFi currently falls short in providing a great user experience. The exciting news is that this is on the brink of change with the introduction of coprocessors that will empower DeFi protocols to offer data-driven features, enhance UX and refine risk management. Moreover, as decentralized AI infrastructure advances, we progress towards a future of Intelligent DeFi.
