Summary

Decentralized physical infrastructure networks (DePINs) and decentralized virtual infrastructure networks (DeVINs) are essential components of technological advancement. Three factors drive this: the heterogeneity of computational workloads, the growing demand for highly performant infrastructure to run them, and supply constraints that, at current rates, will worsen over time. A full solution to the network value optimization problem is ambitious. This paper addresses part of it by exploring two token models that are used to systematically reduce the money velocity of a network.

To generalize: it is inherently harder to bootstrap extremely performant, information-centric resource networks, which implies that traditional methods of capital formation are far less effective at solving these supply-side problems. This opens up a surface area for DePIN to thrive. While end customers won’t especially care about DePINs, they do care about cost, latency, resilience, performance, verifiability, and specificity. DePIN is simply a capital formation framework that enables these characteristics in the systems consumers use.

Introduction

Physical networks are all around us: we use them to communicate with family, friends, and colleagues. Businesses rely on them for communication, information, and storage. Financial institutions rely on them for trusted market information to execute transactions. The economic status quo as we know it is largely a function of how quickly decision-relevant information becomes available, and the world would look very different if the speed of everyday tasks regressed. This is intuitive whenever we are stuck watching a video buffer or struggling to maintain a clear WiFi connection during a meeting.

On the demand side, internet connectivity has been found to improve access to global markets: several studies find suggestive evidence that firms with better internet connectivity export and sell more. Research by Jochen Lüdering explores the relationship between low-latency internet and economic growth [1]. The study introduces a novel measure of internet quality, a country’s average latency, and incorporates it into established models of economic growth. Its findings largely confirm previous results on the relationship between internet access and economic growth.

Governments and sovereigns recognize the implications of robust broadband networks as well. Earlier this year, President Biden urged the Department of Commerce to allocate funding for high-speed internet infrastructure deployment through the Broadband Equity, Access, and Deployment (BEAD) program, a $42.45 billion grant program. This announcement is the largest internet funding announcement in United States history and aims to provide a minimum degree of connectivity to 8.5 million households and small businesses in areas that lack adequate internet infrastructure. [2]

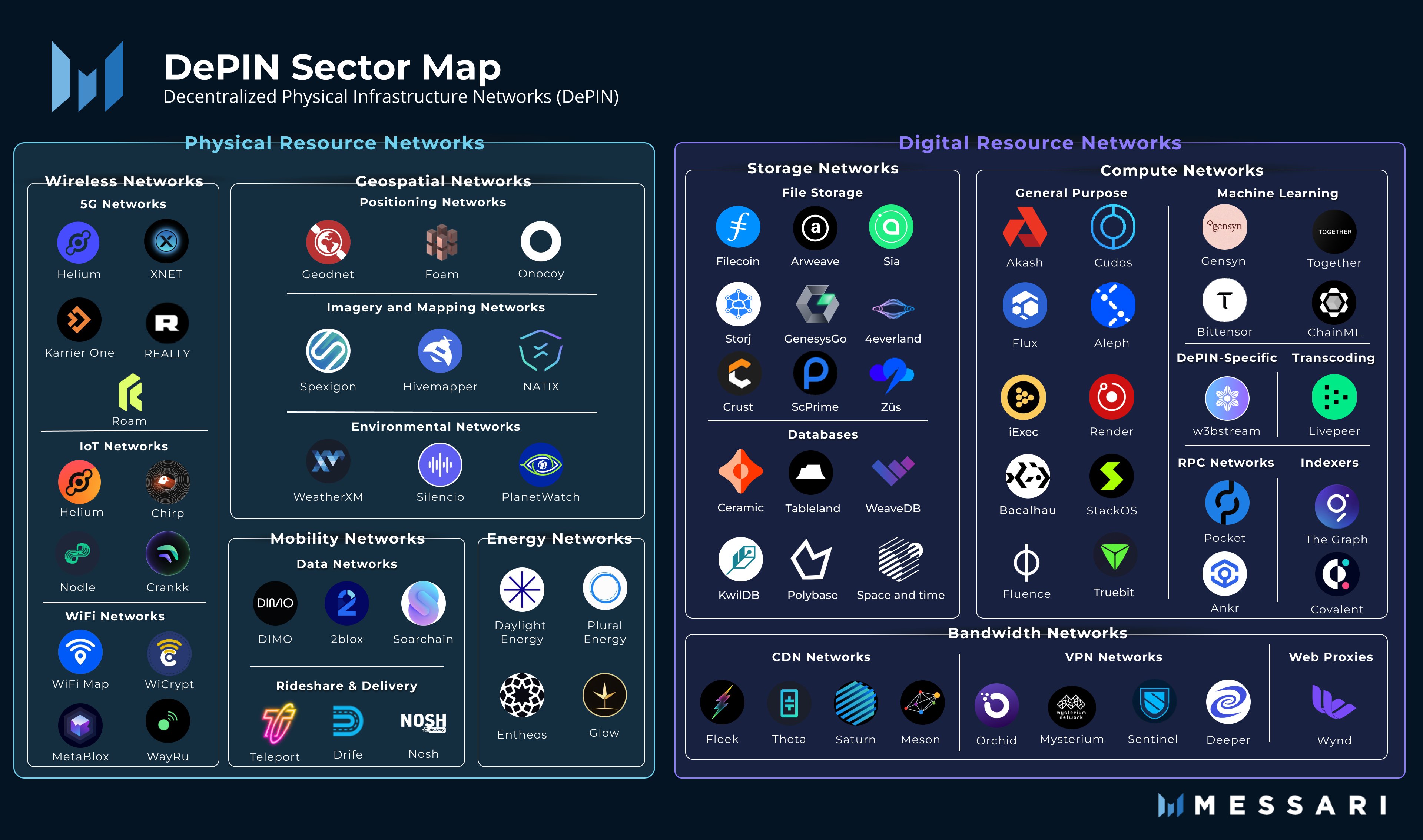

The applications of robust DePINs are extensive. Incumbent physical infrastructure networks supply much of the plumbing needed for 5G and for existing LoRaWAN networks that serve IoT devices. These networks tend to be cost-ineffective because the required infrastructure fails to scale economically. Consequently, systemic innovations that amortize CapEx requirements and scale these networks efficiently could create economic, environmental, and computational efficiencies. The following provides an overview of some of the applications that use DePINs today.

Token Economics and Network Value Accrual

This section explains why generalized work networks, such as some DePINs, are intrinsically valuable. It details the two main economic models used by most DePINs: the Work Token model and the Burn and Mint Equilibrium (BME) model.

Work Token Model

The Work Token model is a type of utility token model in which a service provider stakes or bonds the network’s native tokens to earn the right to perform work on its behalf. For commodity services such as distributed file storage, distributed video encoding, or off-chain verifiable computation, the probability that a given service provider is awarded the next job is proportional to its share of total tokens staked. [3] As this section will show, the effect of token velocity on a closed system generating free cash flow (FCF) creates a powerful value creation dynamic, in which recursive increases in demand have non-linear effects on the system’s total utility.
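The stake-proportional assignment rule above can be sketched in a few lines of Python. This is a minimal illustration, assuming each job is drawn at random with weights equal to each provider’s stake; the provider names and stake amounts are hypothetical, and real networks may layer further checks (proofs, reputation, collateral requirements) on top.

```python
import random

def selection_probabilities(stakes: dict[str, float]) -> dict[str, float]:
    """Each provider's chance of winning the next job: its stake / total stake."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    return {provider: stake / total for provider, stake in stakes.items()}

def assign_job(stakes: dict[str, float], rng: random.Random) -> str:
    """Draw one provider with probability proportional to its stake."""
    providers = list(stakes)
    return rng.choices(providers, weights=[stakes[p] for p in providers], k=1)[0]

# Hypothetical providers and their stakes (in native tokens).
stakes = {"alice": 600.0, "bob": 300.0, "carol": 100.0}
print(selection_probabilities(stakes))  # {'alice': 0.6, 'bob': 0.3, 'carol': 0.1}

# Simulate many job assignments; win counts should track stake shares.
rng = random.Random(42)
wins = {p: 0 for p in stakes}
for _ in range(10_000):
    wins[assign_job(stakes, rng)] += 1
print(wins)
```

Over many draws, each provider’s share of jobs converges to its share of total stake, which is why adding stake is the way to earn a larger slice of network revenue in this model.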

As an example, Filecoin’s architecture requires service providers to contractually commit to storing some data for a period of time. Each contract stipulates that service providers must lock up some number of $FIL, and the data must be available for the duration of the storage contract with some minimum bandwidth guarantee. If the service provider violates the contract, they are automatically penalized by the protocol, and some of their staked tokens are slashed (burned). The work token model is predicated on assigning new jobs to service providers based on their staked tokens.
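A toy model of the lock-and-slash mechanic may help make this concrete. This sketch assumes a flat penalty rule: a fixed fraction of collateral is burned for each failed proof, capped at the full collateral. Filecoin’s actual fault and termination fees are computed differently, and the class and parameter names here are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class StorageDeal:
    """Hypothetical storage contract backed by locked collateral."""
    provider: str
    collateral: float      # tokens locked for the duration of the contract
    slash_fraction: float  # assumed share of collateral burned per failed proof

    def settle(self, failed_proofs: int) -> tuple[float, float]:
        """Return (tokens returned to the provider, tokens burned) at contract end."""
        penalty = self.collateral * self.slash_fraction * failed_proofs
        burned = min(self.collateral, penalty)  # cannot burn more than was locked
        return self.collateral - burned, burned

deal = StorageDeal(provider="sp-1", collateral=100.0, slash_fraction=0.1)
print(deal.settle(failed_proofs=0))   # (100.0, 0.0)
print(deal.settle(failed_proofs=3))   # (70.0, 30.0)
print(deal.settle(failed_proofs=20))  # (0.0, 100.0)
```

The point of the design is that the provider’s locked tokens are at risk for the whole contract, so honoring the contract is the cheapest strategy.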

In a work token model, any increase in supply-side revenue should drive more demand for the token, and thus a higher token price. Basic economic principles suggest a positive relationship between demand for network services and the price of the token: as demand increases, the utility of the network and the value of its tokens should theoretically increase, as more tokens are staked to perform work. To confirm that this relationship can hold when network utility is maximized, we seek to:

1 Establish token velocity as critical to the Network Value Optimization problem:

For utility tokens, $$Avg\ Network\ Value = \frac{Total\ Transaction\ Volume}{Velocity}$$, based on the NVT (network value to transactions) ratio popularized by Willy Woo of CMCC Crest. NVT attempts to model the balance and divergences between the network value assigned by the market and the utility the network brings to users in transactional throughput [4]. Writing $$\overline{U_{t}}$$ for the expected utility (average network value) at time $$t$$:

$$\overline{U_{t}} = \frac{TxnVolume}{Velocity}$$

If we assume that, for most tokens, $$Velocity < Total\ txn\ volume$$ generally holds, then this difference causes these assets to trade at a discount to their “intrinsic” value. Until these assets achieve sufficient velocity to realize their full intrinsic value, they trade at this discount given their liquidity profile.

Corollary: Absent speculation, assets with high velocity will struggle to maintain long-term price appreciation. It follows that mechanisms that encourage holding, not just usage, should have positive expected value for protocols.

◾ Token Velocity is one of the key levers that will influence long-term, non-speculative value.

2 Confirm Work Tokens maximize Network Terminal Value

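A token that functions as a monetary base can be valued using the equation of exchange, $$MV = PQ$$, where $$M$$ is the size of the monetary base, $$V$$ its velocity, $$P$$ the price of the resource being provisioned, and $$Q$$ the quantity of that resource. Therefore $$M = \frac{PQ}{V}$$. This is the proprietary payment currency model.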
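A worked example of $$M = \frac{PQ}{V}$$ shows why velocity matters so much in this model. The sketch below assumes hypothetical values for $$P$$, $$Q$$, $$V$$, and circulating supply, derives a per-token utility value by spreading $$M$$ evenly across all tokens, and ignores speculative demand entirely.

```python
def monetary_base(price: float, quantity: float, velocity: float) -> float:
    """Equation of exchange MV = PQ solved for M = PQ / V."""
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return price * quantity / velocity

def utility_value_per_token(price: float, quantity: float,
                            velocity: float, token_supply: float) -> float:
    """Spread the monetary base evenly across all tokens in circulation."""
    return monetary_base(price, quantity, velocity) / token_supply

# Hypothetical network: $2 per unit of resource, 500M units provisioned per year,
# 100M tokens in circulation.
P, Q, SUPPLY = 2.0, 500_000_000.0, 100_000_000.0
for V in (20.0, 10.0, 5.0):
    print(f"V={V:>4}: M=${monetary_base(P, Q, V):,.0f}, "
          f"per-token value=${utility_value_per_token(P, Q, V, SUPPLY):.2f}")
```

For the same $1B of annual economic activity, halving velocity doubles $$M$$ and therefore the per-token value. Equivalently, $$M / PQ = 1/V$$, which is the NVT ratio expressed in terms of velocity.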

Deduction yields that, absent any speculators, increased usage of the network will cause an increase in the price of the token. As demand for the service grows, more revenue will flow to service providers. Given a fixed supply of tokens, service providers will rationally pay more per token for the right to earn part of a growing cash flow stream.

Because work tokens simply represent an enforcement mechanism for the contractual obligations that service providers must fulfill to maintain their share of the network, a net present value (NPV) model can be used to value these networks.
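Under this NPV framing, a work token is worth its pro rata share of the discounted cash flows the network pays to service providers. The sketch below rests on hypothetical assumptions: all operating cash flow accrues to stakers, the token supply is fixed, cash flows arrive at the end of each year, and the 40% discount rate and cash flow figures are illustrative.

```python
def npv(cash_flows: list[float], discount_rate: float) -> float:
    """Present value of cash flows received at the end of years 1..n."""
    return sum(cf / (1 + discount_rate) ** year
               for year, cf in enumerate(cash_flows, start=1))

def work_token_value(cash_flows: list[float], discount_rate: float,
                     token_supply: float) -> float:
    """Per-token value if network cash flow accrues pro rata to all tokens."""
    return npv(cash_flows, discount_rate) / token_supply

SUPPLY = 100_000_000.0             # hypothetical fixed token supply
base = [10e6, 20e6, 40e6]          # hypothetical provider cash flows (USD/year)
doubled = [2 * cf for cf in base]  # demand for the service doubles

print(f"{work_token_value(base, 0.40, SUPPLY):.4f}")     # 0.3192
print(f"{work_token_value(doubled, 0.40, SUPPLY):.4f}")  # 0.6385
```

With supply fixed, doubling the cash flow stream doubles what a rational provider should pay per token, which is the mechanism described above.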

However, terminal value in its conventional definition is not a sufficient heuristic for valuing a work token network. One potential fix would be to incorporate the impact of operating cash flows into the proprietary payment currency model. To formalize the impact of money velocity on the terminal value calculation, consider:

- $$V$$: Velocity of money, the rate at which money circulates in the economy.
- $$i$$: Inflation rate, a function of $$V$$, where higher $$V$$ typically implies higher $$i$$.
- $$g$$: Growth rate of cash flows, influenced by economic conditions, which are in turn affected by $$V$$.
- $$r$$: Discount rate, adjusted for inflation ($$i$$), where $$r = r_{real} + i$$.
- $$CF_{n+1}$$: The cash flow at period $$n+1$$.

The terminal value under the constant-growth (Gordon growth) model, which requires $$r > g$$, is then:

$$TV = \frac{CF_{n+1}}{(r-g)}$$

In this construct, changes in velocity $$V$$ can indirectly affect $$g$$ (via economic growth changes) and $$r$$ (via inflation adjustments), thereby altering the Terminal Value.

Filecoin should capture ~100x more value in this model than in the proprietary payment currency model (discussed later) given a discount rate of 40% and operating margins of 50%. [5]

A decrease in money velocity ($$V$$) typically leads to lower inflation ($$i$$). Lower inflation can lower the discount rate ($$r$$), since $$r = r_{real}+i$$. If $$r$$ decreases while the growth rate ($$g$$) remains constant, or falls by less than $$r$$ does (for example, due to slowing state growth), the denominator of the terminal value formula, $$(r - g)$$, becomes smaller, and a smaller denominator yields a higher $$TV$$. Thus, a substantial decrease in $$V$$, indicative of a more durable network with less reliance on emissions, would increase the terminal value. The effect is not proportional to the change in $$V$$: $$TV$$ is inversely proportional to $$(r - g)$$, so it responds non-linearly and grows sharply as $$r$$ approaches $$g$$.
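The chain from velocity to terminal value can be made concrete with a small sketch. The text does not specify how velocity maps to inflation, so the code assumes a hypothetical linear relation $$i = kV$$. The values of $$k$$, $$r_{real}$$, $$g$$, and $$CF_{n+1}$$ are illustrative only.

```python
def terminal_value(next_cash_flow: float, r: float, g: float) -> float:
    """Gordon growth terminal value TV = CF_{n+1} / (r - g); requires r > g."""
    if r <= g:
        raise ValueError("discount rate must exceed the growth rate")
    return next_cash_flow / (r - g)

def tv_given_velocity(velocity: float, next_cash_flow: float = 10e6,
                      r_real: float = 0.25, g: float = 0.05,
                      k: float = 0.005) -> float:
    """Map velocity to TV via i = k * V (hypothetical) and r = r_real + i."""
    inflation = k * velocity
    r = r_real + inflation
    return terminal_value(next_cash_flow, r, g)

for v in (30.0, 20.0, 10.0):
    print(f"V={v:>4}: TV=${tv_given_velocity(v):,.0f}")
```

Under these assumptions, halving $$V$$ from 20 to 10 raises $$TV$$ by 20%, not 100%. The size of the effect depends on how close $$r$$ sits to $$g$$, not on $$V$$ alone.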

Corollary: In the work token model, as a network grows and matures, it de-risks, which lowers the discount rate and ultimately increases the terminal value at equilibrium (implying that total token value should grow superlinearly relative to total transaction throughput). The work token model thus reduces token velocity without adjusting the parameters of $$M$$ in the equation of exchange, because tokens are staked or locked on the supply side of the network.

◾ Tokens that represent the right to perform work on behalf of a network should be valued at a multiple of the operating cash flows that the system generates.

BME Model

The BME model is the other predominant model used to ensure economic stability, and it achieves this objective through a proprietary payment currency system. The model is unique insofar as supply-side participants aren’t required to stake tokens to provide services to the network. Instead, increases in demand-side usage should drive more demand for the token, and thus a higher token price. The supply side of the market is rewarded with inflationary tokens based on how much work it has done. Uniquely in the BME model, tokens that are burned are eventually reintroduced as inflationary tokens, which enables protocols to operate with a fixed token supply and offer perpetual incentives. The model introduces a speculative component to total network value, whereby the expected utility $$\overline{U_{t}}$$ also includes some non-zero constant that contributes to either upward or downward price pressure. In short, the BME model translates protocol usage into token buying pressure.

In the case of a project like Helium, enterprises and developers use Data Credits (DCs) to pay transaction costs for wireless data transmission on the network. DCs are USD-pegged utility tokens derived from HNT and can only be produced by burning HNT; this HNT-to-DC relationship is the burn/mint equilibrium. Ultimately, the price maximization function for HNT depends on variables such as network usage and demand for DCs, which network participants need in order to transfer data, onboard hotspots, or assert hotspot locations.
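The HNT-to-DC conversion can be sketched as follows. Helium fixes the price of a Data Credit at $0.00001, so the USD cost of network usage is stable while the amount of HNT burned varies with HNT’s price. This is a simplified sketch: the HNT price is passed in as a plain argument (on the network it comes from a price oracle), fees are ignored, and the function names are hypothetical.

```python
from decimal import Decimal

USD_PER_DC = Decimal("0.00001")  # one Data Credit is fixed at $0.00001

def dc_from_burn(hnt_burned: Decimal, hnt_usd_price: Decimal) -> int:
    """Whole DCs minted by burning HNT at the given USD price (rounded down)."""
    return int(hnt_burned * hnt_usd_price / USD_PER_DC)

def hnt_to_burn(usd_of_data: Decimal, hnt_usd_price: Decimal) -> Decimal:
    """HNT a user must burn to buy a given USD amount of Data Credits."""
    return usd_of_data / hnt_usd_price

print(dc_from_burn(Decimal("2"), Decimal("5")))   # 1000000
print(hnt_to_burn(Decimal("10"), Decimal("5")))   # 2
print(hnt_to_burn(Decimal("10"), Decimal("10")))  # 1
```

Doubling HNT’s price halves the HNT a user must burn for the same $10 of data. This is how usage converts into buy-and-burn pressure on the token while the end customer sees a stable USD price.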

Central to network value maximization in the BME model is the asymmetry between burning and minting and the relative price pressure of each. In the BME model, terminal value is influenced by the dynamic balance between token burning and minting, which affects token supply and price. In the Work Token model, by contrast, terminal value is tied more directly to demand for network services and the utility those services provide, which drives the value of the work tokens. To formalize, define:

- $$B_t$$: Tokens burned at time $$t$$.
- $$M_t$$: Tokens minted at time $$t$$.
- $$S_t$$: Total supply of tokens at time $$t$$.
- $$P_t$$: Price of the token at time $$t$$.

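Token supply then evolves according to

$$S_{t+1} = S_t + M_t - B_t$$

and the model is in equilibrium when $$B_t = M_t$$, so that supply is stable. Away from equilibrium:

- If $$B_t > M_t$$, then $$P_{t+1} > P_t$$ (net deflation, upward pressure on price).
- If $$B_t < M_t$$, then $$P_{t+1} < P_t$$ (net inflation, downward pressure on price).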
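The supply recursion $$S_{t+1} = S_t + M_t - B_t$$ can be simulated directly. The sketch makes three illustrative assumptions: a constant mint per period, burns driven by USD-denominated demand (as with Helium’s Data Credits), and a fixed token price. The fixed price isolates supply dynamics; price feedback is not modeled, and all numbers are hypothetical.

```python
def simulate_bme(initial_supply: float, mint_per_period: float,
                 usd_demand: list[float], token_price: float) -> list[float]:
    """Track S_{t+1} = S_t + M_t - B_t, with burns B_t = usd_demand_t / token_price."""
    supply = initial_supply
    history = [supply]
    for demand in usd_demand:
        burned = demand / token_price               # B_t: tokens burned to pay for usage
        supply = supply + mint_per_period - burned  # M_t minted as supply-side rewards
        history.append(supply)
    return history

# Demand rises from below to above the mint rate (10,000 tokens = $50,000 at $5).
history = simulate_bme(1_000_000.0, 10_000.0, [20_000.0, 50_000.0, 100_000.0], 5.0)
print(history)  # [1000000.0, 1006000.0, 1006000.0, 996000.0]
```

The three periods show net inflation ($$B_t < M_t$$), equilibrium ($$B_t = M_t$$), and net deflation ($$B_t > M_t$$) in turn.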

Comparative Analysis

In theory, the Work Token model should accrue more value to individual operators than the BME model; however, the BME model introduces moneyness as a variable in the overall utility function, which likely adds some premium to terminal network value. It is still likely too early to observe empirically the explicit differences in value accrual between these two models.

In Work Token based networks, service providers bid for work as a function of their stake, and the selected provider then supplies a commoditized service to the network. For example, work tokens are applicable to most decentralized cloud services, which can use the model because they provide undifferentiated commodity services. While work token networks should theoretically accrue the greatest value for undifferentiated, commoditized services, BME networks should accrue more value among heterogeneous, service-based networks.

In BME networks, excess token supply floats in the market with some degree of moneyness; in other words, these tokens are at least Intermediate Menger Goods, carrying some price premium for use as a store of value against FX-related assets. Samani has suggested that there is no universal model for calculating non-speculative value. To generalize, there should theoretically always be a means to derive the total utility generated by work token networks. BME networks are not symmetric in this way, because their total utility has a speculative component that can drive terminal values higher. Ultimately, it is yet to be determined how well the BME model captures value, but BME tokens could potentially be valued at a multiple of demand-side revenue.

Primitives

Reasoning from first principles, the following infrastructure primitives may precede dApps temporally:

1 dCDN and dStorage Markets

Decentralized CDNs will likely serve as information conduits, efficiently delivering web content and services. They will use a distributed network of servers, often located closer to the end-users, to reduce latency and increase speed in accessing web content like videos, images, and web pages. There may exist vertically integrated solutions that offer specialized CDN services for certain types of content. Over time, these services may aggregate, but the mechanism by which the network functions will remain decentralized.
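The latency argument for dCDNs reduces to a routing choice: serve each request from the lowest-latency node that has the content cached, and fall back to the origin otherwise. This is a minimal sketch, assuming the client already has round-trip-time measurements; the node names, RTT values, and cached items are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class EdgeNode:
    name: str
    rtt_ms: float                                  # measured round-trip time from the client
    cache: set[str] = field(default_factory=set)   # content IDs this node holds

def pick_source(content_id: str, edges: list[EdgeNode], origin: str = "origin") -> str:
    """Lowest-RTT edge node holding the content, else the origin server."""
    holders = [e for e in edges if content_id in e.cache]
    if not holders:
        return origin
    return min(holders, key=lambda e: e.rtt_ms).name

edges = [
    EdgeNode("edge-fra", 12.0, {"video-1"}),
    EdgeNode("edge-nyc", 85.0, {"video-1", "img-7"}),
    EdgeNode("edge-sin", 210.0),
]
print(pick_source("video-1", edges))  # edge-fra
print(pick_source("img-7", edges))    # edge-nyc
print(pick_source("page-3", edges))   # origin
```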

Storage networks such as Sia and Storj, on the other hand, are primarily designed to store data across a distributed network that can be less geographically specific than a CDN. These networks break data into smaller fragments (data sharding), encrypt them, and then distribute and store them across multiple nodes. This eliminates the need for third-party trust, removes single points of failure, and increases data security and privacy, all while being environmentally sustainable and cost-effective. [6]
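The fragment-and-distribute idea can be illustrated with a toy erasure code. This sketch splits data into k shards and adds a single XOR parity shard, so the file survives the loss of any one node. It omits encryption and stands in for the stronger erasure codes (such as Reed-Solomon) that production storage networks use; the shard count and node placement are hypothetical.

```python
from functools import reduce
from typing import Optional

def split_into_shards(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal-length shards, zero-padding the tail."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\x00")
    return [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]

def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def add_parity(shards: list[bytes]) -> list[bytes]:
    """Append one XOR parity shard; any single lost shard can then be rebuilt."""
    return shards + [reduce(xor_bytes, shards)]

def recover(shards: list[Optional[bytes]]) -> list[bytes]:
    """Rebuild at most one missing shard (None) by XOR-ing all surviving shards."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost shard")
    restored = list(shards)
    if missing:
        restored[missing[0]] = reduce(xor_bytes, [s for s in shards if s is not None])
    return restored

data = b"decentralized storage demo"
k = 4
stored = add_parity(split_into_shards(data, k))  # 4 data shards + 1 parity shard
# Each shard would sit on a different (hypothetical) node; simulate one going offline.
stored[1] = None
rebuilt = recover(stored)
print(b"".join(rebuilt[:k])[:len(data)] == data)  # True
```

Real networks trade a higher storage overhead for tolerance of many simultaneous node failures, which a single parity shard cannot provide.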

CDNs are optimized for content delivery speed, while storage networks focus on secure, distributed data storage. Both may be important for edge computing applications. dCDN and dStorage are significant because they drastically reduce both the cost and the latency with which information is transacted and accessed. The implications of this, some of them substantial, are addressed in the next section.

2 Compute Markets

Compute markets are particularly interesting given current interest in the AI/ML applications of decentralized networks. Demand for computational resources has ballooned to the point where it is widely expected to outpace the rate of hardware production.

Many deep learning models are overparameterized: they have more parameters than training data points, and the implicit regularization that emerges during training allows them to learn effectively despite their size. The challenge of overparameterization is that the number of parameters must grow at least as fast as the number of data points. Since the cost of training a deep learning model scales with the product of the number of parameters and the number of data points, training cost grows at least quadratically with the amount of training data. A linear improvement in performance generally requires a faster-than-linear increase in the amount of training data, and therefore an even faster increase in compute. Training is also hard in the worst case: finding optimal weights is NP-hard even for extremely small networks. [7], [8]
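The cost-scaling argument can be checked with simple arithmetic. This sketch makes a conservative, illustrative assumption: parameter count grows only linearly with the number of data points. Even then, training cost, taken as proportional to parameters times data points, grows quadratically with data.

```python
def relative_training_cost(data_points: float, baseline: float = 1e6,
                           params_per_point: float = 1.0) -> float:
    """Training cost (proportional to parameters x data points) relative to a baseline.

    Assumes, hypothetically, parameters = params_per_point * data_points.
    """
    def cost(n: float) -> float:
        parameters = params_per_point * n
        return parameters * n
    return cost(data_points) / cost(baseline)

for n in (1e6, 2e6, 4e6, 8e6):
    print(f"{n:.0e} data points -> {relative_training_cost(n):.0f}x baseline cost")
```

Doubling the dataset quadruples compute under these assumptions. If parameters must grow faster than linearly, the curve is steeper still.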

This surge in demand for computational resources and its economic impacts are explored by Thompson et al., who discuss the computational limits of ML. Their research catalogs the magnitude of this dependency, showing that progress across a wide variety of applications is strongly reliant on increases in computing power. Extrapolating this reliance forward reveals that progress along current lines is rapidly becoming economically, technically, and environmentally unsustainable. [9]

Empirically, TSMC’s expansion plans indicate that equipping the shell of a new facility takes around two years. For instance, the shell of TSMC’s Fab 21 in Arizona is expected to be complete by early 2025, after which equipping it will take around another two years. [10] Timelines suggest it could take anywhere from 2 to 5 years for these plants to become production-ready, and shortages of skilled labor, among other factors, have already delayed progress in Arizona by over a year. Meanwhile, global demand for computational resources is not slowing down; in fact, it is increasing faster than ever.

Given the above, it follows that software must conform to hardware, since the incumbent compute market is hardware-constrained. We have already begun to see this play out, as decentralized compute marketplaces like Gensyn take the stage to directly reward supply-side participants for pledging their compute time to the network and performing ML tasks. [11]

Given the diversity, idiosyncrasies, and vertical heterogeneity of this market, there will likely be a handful of valuable compute marketplaces.

3 Bandwidth Markets

Decentralized bandwidth markets are possibly the most fundamental primitive, given that bandwidth is an a priori resource that validators need to ensure security and liveness. As with the other primitives, the key areas of focus within bandwidth markets are solving the supply-side cold start problem and serving heterogeneous demand.

Bandwidth marketplaces ultimately enable much more efficient creation of proxy networks, a construct that is not entirely novel (we use proxy networks of this kind when establishing a VPN connection). Proxy networks uniquely create data efficiencies that could form part of a broader solution to the compute scaling dilemma noted above.

Markets like Wynd Networks’ Grass or GIANT reward participants with native tokens. These service providers are a diverse set of individual residential proxies, hotspot aggregators, inflight wireless providers, fixed broadband ISPs, and decentralized ISPs. [12] The dISP approach distributes workloads by spreading data processing tasks across multiple nodes, reducing the load on any single system while simultaneously reducing latency and improving overall computational efficiency.
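Spreading tasks so that no single node is overloaded is, at its simplest, a load-balancing problem. The sketch below uses the greedy longest-processing-time heuristic: assign the largest remaining task to the currently least-loaded node. It illustrates the idea only; it is not how Grass, GIANT, or any particular dISP schedules work, and the task costs and node names are hypothetical.

```python
import heapq

def distribute(tasks: dict[str, float], nodes: list[str]) -> dict[str, list[str]]:
    """Greedy LPT: give each task, largest first, to the node with the least load so far."""
    heap = [(0.0, node) for node in nodes]  # (current load, node name)
    heapq.heapify(heap)
    assignment: dict[str, list[str]] = {node: [] for node in nodes}
    for task, cost in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        load, node = heapq.heappop(heap)
        assignment[node].append(task)
        heapq.heappush(heap, (load + cost, node))
    return assignment

tasks = {"t1": 5.0, "t2": 4.0, "t3": 3.0, "t4": 3.0, "t5": 1.0}
assignment = distribute(tasks, ["node-a", "node-b", "node-c"])
loads = {node: sum(tasks[t] for t in ts) for node, ts in assignment.items()}
print(assignment)
print(loads)  # {'node-a': 5.0, 'node-b': 5.0, 'node-c': 6.0}
```

The busiest node carries 6 units of work instead of the 16 a single system would handle alone.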

These markets allow unused bandwidth to be repurposed for profit, creating a system in which users can become ISPs and bandwidth can be tokenized and traded as a marketable asset. In other words, bandwidth marketplaces could represent a broader shift to a “DTC internet services” regime, in which users have democratized access to internet services and ownership over their data, bandwidth, and so on. [13] Success here would look like low-cost internet access, high-speed connectivity, and end-to-end encryption for privacy and security.

Opportunity Zones, Applications, and Areas of Further Investment

Vertically Integrated Transaction Archival

Not all transactions are equally valuable. Historically, one could look to the TVL within a smart contract to determine its value. However, as the content of transactions becomes more diverse, we expect the value of transaction archival to increase in parallel. To date, the overwhelming majority of on-chain transactions are not valuable. If the content of the underlying transactions becomes increasingly valuable by some monetary or Mengerian measure, then the surface area for applications built on top of that plane should become valuable as well.

Decentralized Cloud Compute Networks

Cloud computing refers to the delivery of computing services, including servers, storage, databases, networking, software, analytics, and intelligence, over the internet to offer scalable resourcing and benefit from economies of scale. To date, the largest providers of these services have been Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), owing to their ability to raise the capital required to build and host these networks. Decentralized alternatives, while nascent, could unbundle this suite of services and reduce costs, especially for customers who need more bespoke service offerings or whose demand varies over time.

Public, private, and hybrid deployment models could all work in this scenario, each presumably at a different cost. Companies like Antimetal have highlighted the “one size does not fit all” nature of incumbent solutions and seek to arbitrage the opportunity. Beyond cost reduction, trusted computation is an area of focus. There are obvious negative externalities associated with centralized compute networks, especially when their operators own and control resources outright.

This list is not comprehensive.

Credits

Thanks to Rajiv (Framework), Mads (Maven11), Sami (Messari), Ani (Dragonfly), Chase (Space & Time), Dougie (Figment Capital), and Jonah (Blockchain Capital) for their thoughtful discussion and review leading up to the publishing of this post.

Disclosures

The views and information provided are as of January 2024 unless otherwise indicated and are subject to frequent change, update, revision, verification and amendment, materially or otherwise, without notice, as market or other conditions change. The views expressed above are solely those of the author and there can be no assurance that terms and trends described herein will continue or that forecasts are accurate. Certain statements contained herein are statements of future expectations or forward-looking statements that are based on the author’s views and assumptions as of the date hereof and involve known and unknown risks and uncertainties.

This material is for informational purposes only and is not an offer or a solicitation to subscribe to any fund and does not constitute investment, legal, regulatory, business, tax, financial, accounting or other advice or a recommendation regarding any securities or assets. No representation or warranty, express or implied, is given as to the accuracy, fairness, correctness or completeness of third party sourced data or opinions contained herein and no liability (in negligence or otherwise) is accepted by for any loss howsoever arising, directly or indirectly, from any use of this document or its contents, or otherwise arising in connection with the provision of such third-party data.